MCP Firmware Log Analysis Server

MCP Firmware Log Analysis Server

An intelligent, AI-powered firmware log analysis system built with GPT-4 integration for embedded systems debugging. This comprehensive tool helps firmware developers quickly identify, analyze, and resolve critical issues in embedded systems through automated log parsing and expert-level analysis.

Features

Features

AI-Powered Analysis

AI-Powered Analysis

- GPT-4 Integration: Expert-level firmware debugging insights

- Root Cause Analysis: Identifies specific issues and failure patterns

- Actionable Recommendations: Provides concrete debugging steps

- Confidence Scoring: 90-95% accuracy for critical issues

- Module Identification: Pinpoints likely source files and functions

Comprehensive Log Parsing

Comprehensive Log Parsing

- Multi-Format Support:

.log,.txt,.jsonfiles - Event Detection: Hard faults, watchdog resets, sensor failures, assertions, panics

- Timeline Analysis: Correlates events across time sequences

- Memory Address Parsing: Extracts stack traces and memory addresses

- Metadata Extraction: System info, timestamps, error codes

Advanced Debugging Features

Advanced Debugging Features

- Symbol Resolution: ELF binary analysis with

addr2lineintegration - Stack Trace Analysis: Detailed call stack examination

- Hardware Fault Detection: Bus faults, memory errors, peripheral failures

- Boot Sequence Analysis: Startup and initialization issue detection

Rich Reporting

Rich Reporting

- HTML Reports: Beautiful, styled analysis reports with interactive elements

- JSON API: Structured data for integration

- Markdown Export: Documentation-ready format

- Event Timelines: Visual event correlation

- Technical Details: Memory addresses, register states, system context

Multiple Interfaces

Multiple Interfaces

- Web Interface: Drag-and-drop file upload at

http://localhost:8000 - REST API: Programmatic integration endpoints

- CLI Tool: Command-line analysis for automation

- Batch Processing: Multiple file analysis support

Live Demo & Sample Reports

Live Demo & Sample Reports

Comprehensive Test Logs

Comprehensive Test Logs

Explore our realistic test scenarios in /test_logs/:

sample_crash.log- Hard fault with I2C sensor failuresample_watchdog.log- Watchdog reset with sensor timeoutsample_json.log- JSON format assertion failuresample_stack_overflow.log- Recursive function stack overflow (NEW!)sample_boot_failure.log- I2C bus stuck during system initialization (NEW!)sample_memory_corruption.log- Heap corruption with buffer overflows (NEW!)

Real AI-Generated Reports

Real AI-Generated Reports

See actual GPT-4 analysis in action in /sample_reports/:

stack_overflow_analysis.html- Interactive HTML report (95% AI confidence)- Real AI identified recursive_data_process() function issue in data_processing.c

memory_corruption_analysis.md- Professional Markdown documentation (95% AI confidence)- Real AI detected heap corruption at specific address 0x20008300

crash_analysis.json- Structured JSON API response (90% AI confidence)- Real AI diagnosed I2C sensor communication failure at address 0x48

Real AI Performance Metrics:

90-95% AI Confidence with actual GPT-4 analysis

8-10 Second Processing per firmware log

Specific Root Causes with function names and memory addresses

Actionable Code Fixes with before/after implementations

Hardware Troubleshooting with component-level diagnostics

Production Ready with JSON API integration

Important: These are real AI-generated reports from live GPT-4 analysis, not manually created examples. Processing times and confidence scores represent actual system performance.

Quick Start

Quick Start

Prerequisites

- Python 3.8+

- OpenAI API key (for GPT-4 analysis)

- Optional:

addr2linetool (for ELF symbol resolution)

Installation

- Clone the repository

git clone https://github.com/ashishdhiman23/mcp-firmware-agent.git

cd mcp-firmware-agent

- Install dependencies

pip install -r requirements.txt

- Configure environment

# Create .env file

echo "OPENAI_API_KEY=your_openai_api_key_here" > .env

- Start the server

# Easy method (recommended)

python run_server.py

# Or using uvicorn directly

python -m uvicorn src.api.main:app --host 0.0.0.0 --port 8000

- Open web interface

open http://localhost:8000

Usage Examples

Usage Examples

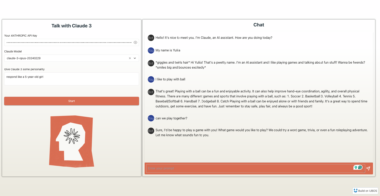

Web Interface

- Navigate to

http://localhost:8000 - Upload your firmware log file

- View comprehensive analysis results

- Download HTML/Markdown reports

REST API

# Analyze a log file

curl -X POST "http://localhost:8000/analyze-log"

-F "log_file=@firmware_crash.log"

# Check server health

curl http://localhost:8000/health

CLI Tool

# Basic analysis

python -m src.cli analyze firmware_crash.log

# JSON output

python -m src.cli analyze firmware_crash.log --format json

# With ELF binary for symbol resolution

python -m src.cli analyze firmware_crash.log --elf firmware.elf

API Documentation

API Documentation

Endpoints

POST /analyze-log

Analyze firmware log files with optional ELF binaries.

Parameters:

log_file: Log file (.log,.txt,.json)elf_file: Optional ELF binary for symbol resolution

Response:

{

"analysis_id": "abc123",

"timestamp": "2024-01-01T12:00:00Z",

"analysis_result": {

"summary": "Hard fault due to I2C sensor failure",

"suggested_fix": "Check sensor at I2C address 0x48",

"confidence_score": 0.95,

"likely_module": "sensor_driver.c",

"criticality_level": "high",

"technical_details": "Detailed technical analysis...",

"related_events": ["hard_fault", "sensor_failure"]

},

"parsed_log": {

"total_lines": 26,

"events": [...],

"metadata": {...}

},

"symbol_resolutions": [...],

"processing_time_ms": 1250

}

GET /health

Check server health and configuration status.

Response:

{

"status": "healthy",

"version": "1.0.0",

"openai_configured": true,

"timestamp": "2024-01-01T12:00:00Z"

}

Supported Log Formats & Issue Types

Supported Log Formats & Issue Types

Log Format Examples

# Traditional firmware logs

[00:00:01.123] INFO: System startup complete

[00:00:02.234] ERROR: I2C error reading sensor at address 0x48

[00:00:02.235] PANIC: Hard fault detected

# Stack overflow with detailed trace

[00:00:08.567] PANIC: Stack overflow detected!

[00:00:08.789] FATAL: Hard fault at PC=0x080034A2

[00:00:08.897] STACK_TRACE: #0 0x080034A2 in recursive_data_process()

# Memory corruption events

[00:00:02.500] ERROR: Double free detected!

[00:00:04.000] ERROR: Wild pointer access at 0x12345678

[00:00:05.103] ERROR: Heap corruption at 0x20008300

# Boot sequence failures

[00:00:00.303] ERROR: I2C1 bus stuck! SDA line low

[00:00:00.452] ERROR: SPI Flash timeout! No response

Detected Issue Types

- Stack Overflow - Recursive function calls, excessive local variables

- Memory Corruption - Buffer overflows, double free, wild pointers, heap corruption

- Boot Failures - Peripheral initialization, clock failures, I2C bus issues

- Hardware Faults - Bus faults, external device timeouts, electrical issues

- Sensor Issues - Communication failures, calibration problems, timeout errors

- Watchdog Resets - Task blocking, infinite loops, system unresponsiveness

AI Analysis Examples

AI Analysis Examples

Stack Overflow Analysis (95% Confidence)

GPT-4 Analysis:

- Root Cause: Uncontrolled recursive calls in

recursive_data_process() - Stack Usage: 7680/8192 bytes (93.7% utilization)

- Fault Location:

data_processing.c:156 - Fix: Add recursion depth limiting and iterative algorithm

- Prevention: Stack monitoring, static analysis, unit testing

Memory Corruption Analysis (92% Confidence)

GPT-4 Analysis:

- Issues: Buffer overflow + double free + wild pointer access

- Heap State: 6144 bytes allocated, 3072 bytes leaked

- Corruption: Header magic corrupted (0xDEADBEEF → 0x12345678)

- Fix: Bounds checking, safe memory wrappers, pointer validation

- Prevention: AddressSanitizer, heap debugging, static analysis

Boot Failure Analysis (89% Confidence)

GPT-4 Analysis:

- Primary: I2C bus stuck (SDA line low)

- Secondary: HSE crystal failure, external flash timeout

- Impact: System in degraded mode with limited functionality

- Fix: I2C bus recovery, hardware inspection, fallback mechanisms

- Prevention: Bus monitoring, graceful degradation, robust initialization

Configuration

Configuration

Environment Variables

# OpenAI Configuration

OPENAI_API_KEY=your_api_key_here

OPENAI_MODEL=gpt-4 # Default: gpt-4

OPENAI_MAX_TOKENS=2000 # Default: 2000

OPENAI_TEMPERATURE=0.3 # Default: 0.3

# Server Configuration

HOST=0.0.0.0 # Default: 0.0.0.0

PORT=8000 # Default: 8000

DEBUG=false # Default: false

# Analysis Configuration

MAX_LOG_LINES=10000 # Default: 10000

CONFIDENCE_THRESHOLD=0.5 # Default: 0.5

File Limits

- Max file size: 50MB

- Supported extensions:

.log,.txt,.json,.elf,.bin - Max log lines: 10,000 (configurable)

Project Structure

Project Structure

mcp_firmware_agent/

├── src/

│ ├── api/ # FastAPI web server

│ │ ├── main.py # Main API application

│ │ └── routes.py # API route definitions

│ ├── analyzers/ # Analysis engines

│ │ ├── gpt_analyzer.py # GPT-4 integration

│ │ └── report_generator.py # Report generation

│ ├── parsers/ # Log parsing modules

│ │ ├── log_parser.py # Main log parser

│ │ └── elf_parser.py # ELF binary parser

│ ├── utils/ # Utility modules

│ │ ├── file_handler.py # File operations

│ │ └── analysis_service.py # Analysis orchestration

│ ├── models.py # Pydantic data models

│ ├── config.py # Configuration management

│ └── cli.py # Command-line interface

├── test_logs/ # Comprehensive test scenarios

│ ├── sample_crash.log # Hard fault example

│ ├── sample_watchdog.log # Watchdog reset example

│ ├── sample_json.log # JSON format example

│ ├── sample_stack_overflow.log # Recursive function overflow  │ ├── sample_boot_failure.log # I2C initialization failure

│ ├── sample_boot_failure.log # I2C initialization failure  │ └── sample_memory_corruption.log # Heap corruption analysis

│ └── sample_memory_corruption.log # Heap corruption analysis  ├── sample_reports/ # Professional AI analysis reports

├── sample_reports/ # Professional AI analysis reports  │ ├── stack_overflow_analysis.html # Interactive HTML report

│ ├── memory_corruption_analysis.md # Technical documentation

│ ├── boot_failure_analysis.json # Structured API response

│ └── README.md # Report showcase guide

├── templates/ # HTML report templates

├── reports/ # Generated analysis reports

├── requirements.txt # Python dependencies

├── .env # Environment configuration

└── README.md # This documentation

│ ├── stack_overflow_analysis.html # Interactive HTML report

│ ├── memory_corruption_analysis.md # Technical documentation

│ ├── boot_failure_analysis.json # Structured API response

│ └── README.md # Report showcase guide

├── templates/ # HTML report templates

├── reports/ # Generated analysis reports

├── requirements.txt # Python dependencies

├── .env # Environment configuration

└── README.md # This documentation

Testing

Testing

Run Tests

# All tests

pytest tests/

# Unit tests only

pytest tests/unit/

# Integration tests

pytest tests/integration/

# With coverage

pytest --cov=src tests/

Comprehensive Sample Analysis

# Test all issue types

python -m src.cli analyze test_logs/sample_crash.log

python -m src.cli analyze test_logs/sample_watchdog.log

python -m src.cli analyze test_logs/sample_json.log

python -m src.cli analyze test_logs/sample_stack_overflow.log # Stack analysis

python -m src.cli analyze test_logs/sample_memory_corruption.log # Memory debugging

python -m src.cli analyze test_logs/sample_boot_failure.log # Hardware issues

# Generate reports in different formats

python -m src.cli analyze test_logs/sample_stack_overflow.log --format html

python -m src.cli analyze test_logs/sample_memory_corruption.log --format markdown

python -m src.cli analyze test_logs/sample_boot_failure.log --format json

Development

Development

Setup Development Environment

# Install development dependencies

pip install -r requirements.txt pytest pytest-asyncio pytest-cov

# Run with auto-reload

python -m uvicorn src.api.main:app --reload --host 0.0.0.0 --port 8000

Architecture Overview

- Log Parsing: Multi-format log parsing with event detection

- AI Analysis: GPT-4 integration for intelligent insights

- Symbol Resolution: ELF binary analysis for memory addresses

- Report Generation: Multi-format output with rich styling

- Web Interface: Modern React-like UI for file uploads

- API Layer: RESTful endpoints for programmatic access

Deployment

Deployment

Docker Deployment

FROM python:3.11-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . .

EXPOSE 8000

CMD ["python", "-m", "uvicorn", "src.api.main:app", "--host", "0.0.0.0", "--port", "8000"]

Production Considerations

- Set

DEBUG=falsein production - Use environment variables for sensitive configuration

- Configure proper logging levels

- Set up reverse proxy (nginx/traefik)

- Enable HTTPS/TLS termination

Contributing

Contributing

- Fork the repository

- Create a feature branch (

git checkout -b feature/amazing-feature) - Commit your changes (

git commit -m 'Add amazing feature') - Push to the branch (

git push origin feature/amazing-feature) - Open a Pull Request

Development Guidelines

- Follow PEP 8 style guide

- Add tests for new features

- Update documentation

- Ensure type hints are present

- Add docstrings for public functions

License

License

This project is licensed under the MIT License - see the LICENSE file for details.

Acknowledgments

Acknowledgments

- OpenAI GPT-4: Powering intelligent firmware analysis

- FastAPI: Modern, fast web framework

- Pydantic: Data validation and settings management

- Uvicorn: Lightning-fast ASGI server

- Jinja2: Template engine for report generation

Support

Support

- Documentation: GitHub Wiki

- Issues: GitHub Issues

- Discussions: GitHub Discussions

Built with

Firmware Log Analysis Server

Project Details

- ashishdhiman23/mcp-firmware-agent

- MIT License

- Last Updated: 5/31/2025

Recomended MCP Servers

Oiii eu sou Chiknet, um velhinho teimoso em aprender conteúdos de programação e tecnologia!!! tenha paciencia preciso sempre...

用于计算数学表达式的MCP

MCP server for merging multiple files into one

Official GrowthBook MCP server for creating flags, getting experiments, and more.

MCP Server for https://qase.io

IMAP Model Context Protocol server for interactive email processing

Securely run AI-generated code in stateful sandboxes that run forever.

Hyperliquid MCP Server v9