Frequently Asked Questions about Langfuse MCP Server

Q: What is an MCP Server? A: MCP stands for Model Context Protocol. An MCP Server acts as a bridge between AI models (especially Large Language Models or LLMs) and external data sources or tools. It standardizes how applications provide context to LLMs, ensuring AI agents have the information they need to make informed decisions.
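To make the "bridge" idea concrete, here is a toy, in-memory sketch of the request/response pattern an MCP server follows. MCP messages are JSON-RPC 2.0, and tools/list and tools/call are method names from the MCP specification. The "echo" tool and the handle function are hypothetical. A real server would use an MCP SDK and a transport such as stdio rather than this hand-rolled dispatcher.

```typescript
// Toy illustration of MCP's JSON-RPC 2.0 request/response pattern.
// Not the official MCP SDK; the "echo" tool below is hypothetical.

type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
};

type JsonRpcResponse = {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
};

type ToolHandler = (args: Record<string, unknown>) => string;

// Tools this toy server exposes to the model (hypothetical).
const tools: Record<string, ToolHandler> = {
  echo: (args) => String(args.text ?? ""),
};

function handle(req: JsonRpcRequest): JsonRpcResponse {
  switch (req.method) {
    case "tools/list":
      // Advertise the available tools so the client (and the model) can discover them.
      return {
        jsonrpc: "2.0",
        id: req.id,
        result: { tools: Object.keys(tools).map((name) => ({ name })) },
      };
    case "tools/call": {
      const name = req.params?.name;
      // Own-property check so names like "toString" are not treated as tools.
      const tool =
        name !== undefined && Object.prototype.hasOwnProperty.call(tools, name)
          ? tools[name]
          : undefined;
      if (!tool) {
        // -32602 is JSON-RPC's "Invalid params" error code.
        return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: "Unknown tool" } };
      }
      // Tool results carry a list of content items; plain text is the simplest kind.
      const text = tool(req.params?.arguments ?? {});
      return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
    }
    default:
      // -32601 is JSON-RPC's "Method not found" error code.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
  }
}
```

The model never talks to the data source directly: it asks the client to send requests like these, and the server decides which tools exist and what they return.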

Q: What is Langfuse and why is it important? A: Langfuse is a platform designed for monitoring, analyzing, and improving the performance of LLM-powered applications. It provides insights into how your models are performing, allowing you to identify areas for optimization and improvement.

Q: What does the Langfuse MCP Server do? A: The Langfuse MCP Server is an MCP server implementation that lets AI assistants interact with Langfuse workspaces. In particular, it lets them query LLM metrics for a given time range directly from Langfuse.

Q: What are the key features of the Langfuse MCP Server? A: Key features include querying both real-time and historical LLM metrics, customizable queries, easy integration with existing AI infrastructure, scalability, and secure communication.

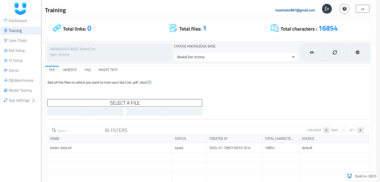

Q: What environment variables are required to run the Langfuse MCP Server?

A: The server reads three environment variables:

- LANGFUSE_DOMAIN: the Langfuse domain (defaults to https://api.langfuse.com)
- LANGFUSE_PUBLIC_KEY: your Langfuse project public key
- LANGFUSE_PRIVATE_KEY: your Langfuse project private key
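A minimal sketch of how a program might resolve these settings, applying the default domain and failing fast when a key is missing. The variable names and the default come from the answer above; the function and interface names are hypothetical and are not part of shouting-mcp-langfuse. Taking the environment as a parameter keeps the sketch testable; in Node you would pass process.env.

```typescript
// Sketch: resolve Langfuse MCP server settings from environment variables.
// loadLangfuseConfig and LangfuseConfig are hypothetical names.

interface LangfuseConfig {
  domain: string;
  publicKey: string;
  privateKey: string;
}

const DEFAULT_DOMAIN = "https://api.langfuse.com";

function loadLangfuseConfig(env: Record<string, string | undefined>): LangfuseConfig {
  const publicKey = env.LANGFUSE_PUBLIC_KEY;
  const privateKey = env.LANGFUSE_PRIVATE_KEY;

  // Both keys are mandatory: report every missing one in a single error
  // instead of sending unauthenticated requests later.
  if (!publicKey || !privateKey) {
    const missing = [
      publicKey ? null : "LANGFUSE_PUBLIC_KEY",
      privateKey ? null : "LANGFUSE_PRIVATE_KEY",
    ].filter((name) => name !== null);
    throw new Error(`Missing environment variable(s): ${missing.join(", ")}`);
  }

  // Fall back to the default domain and strip trailing slashes so API paths
  // can be appended without producing "//".
  const domain = (env.LANGFUSE_DOMAIN || DEFAULT_DOMAIN).replace(/\/+$/, "");
  return { domain, publicKey, privateKey };
}
```

Only LANGFUSE_DOMAIN is optional here; the two keys identify your Langfuse project and have no sensible default.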

Q: How do I install the Langfuse MCP Server?

A: You can install the server using npm with the command npm install shouting-mcp-langfuse.

Q: Can I run the server as a CLI tool?

A: Yes, you can run the server as a CLI tool after setting the required environment variables. Use the command mcp-server-langfuse.

Q: How can I use the Langfuse MCP Server in my code? A: You can import the necessary modules and initialize the server and client with your Langfuse credentials, as shown in the example code provided in the documentation.
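As an illustration of what happens on the wire once a client is connected, here is a minimal sketch of the message an MCP client might send when the model asks for metrics. It assumes the standard MCP tools/call method over the stdio transport, where each JSON-RPC message is one newline-terminated line. The tool name getLLMMetricsByTimeRange, its startTime/endTime arguments, and the helper names are hypothetical; the server's tools/list response tells you the real ones.

```typescript
// Sketch: a tools/call request asking an MCP server for metrics in a time range.
// Tool name and argument names are HYPOTHETICAL, not taken from shouting-mcp-langfuse.

interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

function metricsToolCall(id: number, from: Date, to: Date): ToolCallRequest {
  if (from.getTime() > to.getTime()) {
    throw new RangeError("'from' must not be after 'to'");
  }
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: {
      name: "getLLMMetricsByTimeRange", // hypothetical tool name
      // ISO 8601 strings in UTC keep the time range unambiguous.
      arguments: { startTime: from.toISOString(), endTime: to.toISOString() }, // hypothetical argument names
    },
  };
}

// The stdio transport frames each message as one line of JSON, so the
// serialized request must not contain embedded newlines (JSON.stringify
// without indentation escapes any newlines inside strings).
function toStdioLine(message: ToolCallRequest): string {
  return JSON.stringify(message) + "\n";
}
```

In practice an MCP SDK client builds and frames these messages for you; the sketch only shows their shape.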

Q: What kind of metrics can I get with this server? A: The server allows you to retrieve LLM metrics by time range, giving you insights into the performance of your models over time.
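Behind a "metrics by time range" tool, the server has to ask Langfuse for data. The sketch below builds such a request under stated assumptions: it targets Langfuse's public daily-metrics endpoint (GET /api/public/metrics/daily with fromTimestamp and toTimestamp query parameters) and uses HTTP Basic auth with the public key as username and the private key as password. The helper name is hypothetical, and this is not necessarily how shouting-mcp-langfuse implements it.

```typescript
// Sketch: build a Langfuse metrics request for a time range.
// ASSUMPTIONS: endpoint path, query parameter names, and Basic auth scheme
// follow Langfuse's public API; buildDailyMetricsRequest is hypothetical.

interface MetricsRequest {
  url: string;
  headers: Record<string, string>;
}

function buildDailyMetricsRequest(
  domain: string,
  publicKey: string,
  privateKey: string,
  from: Date,
  to: Date,
): MetricsRequest {
  const url = new URL("/api/public/metrics/daily", domain);
  // URLSearchParams handles percent-encoding of the ":" in ISO timestamps.
  url.searchParams.set("fromTimestamp", from.toISOString());
  url.searchParams.set("toTimestamp", to.toISOString());
  // Basic auth header: base64("publicKey:privateKey"); btoa works for ASCII keys.
  const token = btoa(`${publicKey}:${privateKey}`);
  return { url: url.toString(), headers: { Authorization: `Basic ${token}` } };
}
```

The returned URL and headers can be passed to fetch; keeping request construction separate from the network call makes the time-range logic easy to check in isolation.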

Q: What license does the Langfuse MCP Server use? A: The server is licensed under the ISC license.

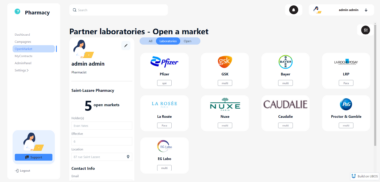

Q: How does the Langfuse MCP Server integrate with UBOS? A: The Langfuse MCP Server is listed on the UBOS Asset Marketplace. Used with UBOS, it lets you orchestrate AI Agents, connect them to enterprise data, and build custom AI Agents and Multi-Agent Systems that have better context awareness and built-in monitoring.

Q: Where can I find the repository for the Langfuse MCP Server? A: The repository is located at https://github.com/z9905080/mcp-langfuse.

Langfuse Integration Server

Project Details

- z9905080/mcp-langfuse

- shouting-mcp-langfuse

- Apache License 2.0

- Last Updated: 3/14/2025

Recommended MCP Servers

A TypeScript implementation of a flight & stay search MCP server that uses the Duffel API to search...

Gladia MCP

A Binary Ninja plugin containing an MCP server that enables seamless integration with your favorite LLM/MCP client.

An MCP server that supports both SMTP and IMAP

An MCP service for querying Ant Design components, including component documentation, API documentation, code examples, and changelogs

An MCP server to help you "play with your documents" via Docling

A nomad MCP Server (modelcontextprotocol)

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.