MCP Memory: Empowering AI Clients with Persistent Memory

In the rapidly evolving landscape of AI and Large Language Models (LLMs), context is king. Applications need to understand user preferences, past interactions, and evolving needs to deliver truly personalized and effective experiences. This is where MCP Memory steps in, providing a robust and scalable solution for giving MCP Clients (like Cursor, Claude, and Windsurf) the ability to remember information about users across conversations.

MCP Memory is a specialized MCP server that uses vector search to move beyond simple keyword matching. Because it compares the semantic meaning of stored information with the meaning of a query, it can retrieve relevant memories even when the exact words aren’t present in the query. Built entirely on Cloudflare’s infrastructure, including Workers, D1, Vectorize (Cloudflare’s vector database), Durable Objects, Workers AI, and Agents, MCP Memory offers a cost-effective and performant solution for AI memory management.
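The toy TypeScript sketch below contrasts the two approaches. The three-dimensional vectors are invented for illustration (real embedding models produce much larger vectors), and `cosineSimilarity` and `keywordMatch` are hypothetical helpers, not part of MCP Memory.

```typescript
// Toy comparison of keyword matching and vector (cosine) similarity.
// The vectors are invented for illustration; real embeddings come from
// an embedding model and have far more dimensions.

interface Memory {
  text: string;
  vector: number[];
}

// Cosine similarity: dot(a, b) / (|a| * |b|), ranging from -1 to 1.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Naive keyword match: true if any query word appears verbatim in the text.
function keywordMatch(query: string, text: string): boolean {
  const textWords = text.toLowerCase().split(/\W+/);
  return query
    .toLowerCase()
    .split(/\W+/)
    .some((w) => w.length > 0 && textWords.includes(w));
}

const memories: Memory[] = [
  { text: "User prefers dark themes in the editor", vector: [0.9, 0.1, 0.0] },
  { text: "User's cat is named Mochi", vector: [0.0, 0.2, 0.95] },
];

// Hypothetical query embedding that points in nearly the same direction
// as the first memory's vector.
const query = { text: "night mode settings", vector: [0.85, 0.2, 0.05] };

// Rank memories by similarity to the query, highest score first.
const ranked = memories
  .map((m) => ({ text: m.text, score: cosineSimilarity(query.vector, m.vector) }))
  .sort((x, y) => y.score - x.score);

console.log(ranked[0].text); // User prefers dark themes in the editor
console.log(memories.map((m) => keywordMatch(query.text, m.text))); // [false, false]
```

Neither stored memory shares a word with "night mode settings", so the keyword check finds nothing, yet cosine similarity still ranks the dark-theme preference first because its vector points in nearly the same direction as the query vector.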

Key Features and Benefits:

- Persistent User Memory: Enables AI clients to remember user preferences, behaviors, and past interactions across multiple conversations. This leads to more personalized and context-aware AI experiences.

- Semantic Vector Search: Uses vector embeddings to capture the meaning of text, which makes memory retrieval more accurate and relevant than keyword-based approaches. The AI client can receive pertinent information even when the user phrases a query differently from how the memory was stored.

- MCP Protocol Compliance: Adheres to the Model Context Protocol (MCP), ensuring seamless integration with other MCP-compatible AI agents and tools.

- Cloudflare-Native Architecture: Leverages the power and scalability of Cloudflare’s infrastructure, including Workers, D1, Vectorize, Durable Objects, and Workers AI, providing a reliable and cost-effective solution.

- Open-Source and Customizable: Offers full control over your data and the ability to customize the system to meet your specific needs.

- Easy Deployment: Can be deployed with a single click to Cloudflare, making it easy to get started quickly. Alternative deployment options are also available for more advanced users.

- Cost-Effective: Free for most users thanks to Cloudflare’s generous free tiers for Workers, Vectorize, Workers AI, and D1.

- Secure: Implements several security measures to protect user data, including isolated namespaces, rate limiting, and TLS encryption.
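The article does not describe how namespaces and rate limiting are implemented. As a rough illustration only, the sketch below assumes each user's data is keyed under a per-user namespace and that requests are throttled with a sliding-window limiter; `namespaceFor`, `SlidingWindowRateLimiter`, and the limit of three requests per minute are all hypothetical.

```typescript
// Hypothetical sketch: per-user namespace isolation plus a sliding-window
// rate limiter. Names and limits are illustrative assumptions, not
// MCP Memory's actual implementation.

// Key every record under the caller's namespace so one user's lookups
// never touch another user's data. Rejecting separators like ":" keeps
// a crafted id from escaping its namespace.
function namespaceFor(userId: string): string {
  if (!/^[A-Za-z0-9_-]+$/.test(userId)) throw new Error("invalid user id");
  return `user:${userId}`;
}

class SlidingWindowRateLimiter {
  private readonly hits = new Map<string, number[]>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the request is allowed, false if the key is over its limit.
  allow(key: string, now: number = Date.now()): boolean {
    // Keep only timestamps that still fall inside the window.
    const recent = (this.hits.get(key) ?? []).filter((t) => now - t < this.windowMs);
    const allowed = recent.length < this.limit;
    if (allowed) recent.push(now);
    this.hits.set(key, recent);
    return allowed;
  }
}

// Example: at most 3 requests per namespace per 60 seconds.
const limiter = new SlidingWindowRateLimiter(3, 60_000);
const alice = namespaceFor("alice");
const results = [0, 1_000, 2_000, 3_000].map((t) => limiter.allow(alice, t));
console.log(results); // [true, true, true, false]
console.log(limiter.allow(alice, 61_000)); // true: the hits at 0 and 1000 ms have expired
console.log(limiter.allow(namespaceFor("bob"), 3_000)); // true: bob has his own budget
```

A sliding window counts only requests from the last `windowMs` milliseconds, so capacity frees up gradually instead of resetting all at once as a fixed window would. In a real deployment this counter state would likely need to live in shared storage rather than in one in-memory map.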

Use Cases:

MCP Memory unlocks a wide range of possibilities for AI-powered applications, including:

- Personalized AI Assistants: Create AI assistants that remember user preferences, past conversations, and individual needs to provide more tailored and helpful responses.

- Context-Aware Chatbots: Build chatbots that understand the context of the conversation and can provide more relevant and accurate information.

- Improved Customer Service: Enhance customer service interactions by providing agents with access to a customer’s past interactions and preferences.

- Smarter E-commerce Recommendations: Provide more personalized product recommendations based on a user’s browsing history and past purchases.

- Enhanced Educational Tools: Create educational tools that adapt to a student’s learning style and track their progress over time.

- Optimized Code Completion and Generation: IDEs can remember project context and provide better auto-completion and code-generation suggestions.

- Better Meeting Summaries and Key-Point Extraction: AI agents can remember the context of an ongoing meeting to produce better summaries.

How MCP Memory Works:

The architecture of MCP Memory is designed for efficiency, scalability, and security:

- Storing Memories: When new text is stored, it is processed by Cloudflare Workers AI using the open-source @cf/baai/bge-m3 model to generate embeddings. These embeddings capture the semantic meaning of the text.

- Data Storage: The text and its vector embedding are stored in two separate locations: Cloudflare Vectorize stores the vector embeddings for similarity search, while Cloudflare D1 stores the original text and metadata for persistent storage.

- Durable Object Management: A Durable Object (MyMCP) manages the state and ensures consistency between the Vectorize index and the D1 database.

- Retrieving Memories: When a query is received, it is converted to a vector using Workers AI with the same @cf/baai/bge-m3 model.

- Similarity Search: Vectorize performs a similarity search to find relevant memories based on the vector embeddings. The results are ranked by similarity score.

- Data Retrieval: The D1 database provides the original text for the matched vectors.

This architecture allows for fast vector similarity search, persistent storage, stateful operations, standardized AI interactions, and MCP protocol compliance.
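The store and retrieve flow described above can be modeled in memory as follows. This is a simplified sketch under stated assumptions, not MCP Memory's code: `VectorStore` stands in for Vectorize, `TextStore` for D1, `MemoryService` plays the coordinating role the article assigns to the `MyMCP` Durable Object, and `embed` is a toy word-count function over a tiny hypothetical vocabulary, used in place of the @cf/baai/bge-m3 model.

```typescript
// Hypothetical in-memory model of the store/retrieve flow. VectorStore stands
// in for Vectorize, TextStore for D1, and embed() for the Workers AI
// embedding model; none of these are the real Cloudflare APIs.

// Toy "embedding": counts words from a tiny fixed vocabulary. A real model
// captures meaning; this only keeps the example deterministic.
const VOCAB = ["prefers", "typescript", "javascript", "python", "lives", "berlin"];

function embed(text: string): number[] {
  const words = text.toLowerCase().split(/\W+/);
  return VOCAB.map((v) => words.filter((w) => w === v).length);
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na > 0 && nb > 0 ? dot / Math.sqrt(na * nb) : 0;
}

// Stand-in for Vectorize: stores only ids, namespaces, and vectors.
class VectorStore {
  private readonly items = new Map<string, { namespace: string; values: number[] }>();

  upsert(id: string, namespace: string, values: number[]): void {
    this.items.set(id, { namespace, values });
  }

  query(namespace: string, values: number[], topK: number): { id: string; score: number }[] {
    return Array.from(this.items.entries())
      .filter(([, e]) => e.namespace === namespace)
      .map(([id, e]) => ({ id, score: cosine(values, e.values) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, topK);
  }
}

// Stand-in for D1: stores the original text and metadata under the same id.
class TextStore {
  private readonly rows = new Map<string, { text: string; createdAt: number }>();

  insert(id: string, text: string): void {
    this.rows.set(id, { text, createdAt: Date.now() });
  }

  get(id: string): string | undefined {
    return this.rows.get(id)?.text;
  }
}

// Coordinator, playing the role the article gives the MyMCP Durable Object:
// every write goes to both stores under one shared id.
class MemoryService {
  private readonly vectors = new VectorStore();
  private readonly texts = new TextStore();
  private nextId = 0;

  store(namespace: string, text: string): string {
    const id = `mem-${this.nextId++}`;
    this.texts.insert(id, text); // original text -> "D1"
    this.vectors.upsert(id, namespace, embed(text)); // embedding -> "Vectorize"
    return id;
  }

  search(namespace: string, query: string, topK = 3): { text: string; score: number }[] {
    // Queries use the same embed() as storage, so the vectors are comparable.
    const matches = this.vectors.query(namespace, embed(query), topK);
    const results: { text: string; score: number }[] = [];
    for (const m of matches) {
      const text = this.texts.get(m.id); // join back to the original text by id
      if (text !== undefined) results.push({ text, score: m.score });
    }
    return results;
  }
}

const service = new MemoryService();
service.store("user:alice", "Prefers TypeScript over JavaScript");
service.store("user:alice", "Lives in Berlin");
service.store("user:bob", "Prefers Python");

const hits = service.search("user:alice", "prefers typescript", 1);
console.log(hits.map((h) => h.text)); // ["Prefers TypeScript over JavaScript"]
```

As in the architecture above, the vector index holds only ids and vectors, the text store holds the original text, and a search joins the two through the shared id. Because bob's memory lives in a different namespace, it never appears in alice's results.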

Getting Started with MCP Memory:

Deploying your own instance of MCP Memory on Cloudflare is straightforward: you can use the one-click deploy button, start from the template, or deploy with Cloudflare’s Wrangler CLI. Detailed instructions are provided in the MCP Memory documentation.
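Once an instance is running, MCP clients communicate with it using the Model Context Protocol, whose messages are JSON-RPC 2.0 requests. The sketch below builds the requests a client might send after the initialize handshake (omitted here). The tool names `addToMCPMemory` and `searchMCPMemory` and their argument keys are assumptions for illustration; a real client should take them from the server's `tools/list` response.

```typescript
// Hypothetical sketch of the JSON-RPC 2.0 requests an MCP client sends to a
// memory server. Tool names and argument keys are assumptions; a real client
// should read them from the server's tools/list response.

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextRequestId = 1;

// Build a request with a fresh id so responses can be matched to requests.
function request(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const msg: JsonRpcRequest = { jsonrpc: "2.0", id: nextRequestId++, method };
  if (params !== undefined) msg.params = params;
  return msg;
}

// MCP invokes a tool with method "tools/call" and params { name, arguments }.
function callTool(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return request("tools/call", { name, arguments: args });
}

// After the initialize handshake (omitted), discover tools, then call them.
const listTools = request("tools/list");
const remember = callTool("addToMCPMemory", { thingToRemember: "User prefers tabs over spaces" });
const recall = callTool("searchMCPMemory", { informationToGet: "indentation preference" });

for (const msg of [listTools, remember, recall]) {
  console.log(JSON.stringify(msg));
}
```

Because `tools/list` returns each tool's name and input schema, a client does not need to hard-code names like these; that discovery step is what lets any MCP-compatible client use the server.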

MCP Memory and UBOS: A Powerful Combination

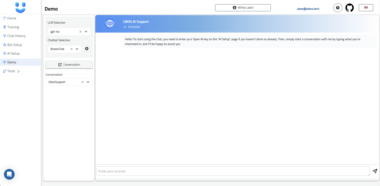

While MCP Memory provides a crucial component for enabling persistent memory in AI applications, it is even more powerful when combined with a comprehensive AI Agent development platform like UBOS. UBOS is a full-stack AI Agent Development Platform focused on bringing AI Agents to every business department. Our platform helps you orchestrate AI Agents, connect them with your enterprise data, build custom AI Agents with your own LLM, and create Multi-Agent Systems.

Here’s how UBOS complements MCP Memory:

- Orchestration: UBOS provides the tools to orchestrate multiple AI Agents, allowing you to build complex workflows that leverage MCP Memory for context and personalization.

- Data Integration: UBOS makes it easy to connect your AI Agents to your enterprise data sources, providing them with the information they need to make informed decisions.

- Custom AI Agent Development: UBOS allows you to build custom AI Agents with your own LLM models, tailoring them to your specific needs.

- Multi-Agent Systems: UBOS supports the creation of Multi-Agent Systems, enabling multiple AI Agents to collaborate and solve complex problems.

- Centralized Management and Monitoring: UBOS offers centralized management and monitoring tools, allowing you to track the performance of your AI Agents and identify areas for improvement. UBOS also provides fine-grained logging and traceability, and lets you easily set up experiments for rapid testing.

By combining MCP Memory with UBOS, you can build truly intelligent and personalized AI applications that drive business value.

Conclusion:

MCP Memory gives AI applications a scalable and cost-effective way to provide persistent user memory. Whether you’re building personalized AI assistants, context-aware chatbots, or smarter e-commerce recommendations, MCP Memory can help you deliver more engaging and effective experiences. Combined with UBOS, you can unlock the full potential of AI and build truly intelligent applications that transform your business. Consider UBOS when building next-generation AI Agent applications, and explore what becomes possible when those agents have MCP Memory.

MCP Memory

Project Details

- moe-Kuroko/mcp-memory

- Last Updated: 4/28/2025

Recommended MCP Servers

Official Model Context Protocol server for Gyazo

An MCP service designed for deploying HTML content to EdgeOne Pages and obtaining an accessible public URL.

A curated awesome list of lists of interview questions. Feel free to contribute!

An implementation of the Model Context Protocol for the World Bank open data API

An MCP server for people who surf waves and the web.

A Model Context Protocol (MCP) server for Apache DolphinScheduler. This provides access to your Apache DolphinScheduler RESTful API...

An MCP server, based on the Brave API, that lets your Claude, Cline, and LangChain perform web searches.

An open source framework for building AI-powered apps with familiar code-centric patterns. Genkit makes it easy to develop,...

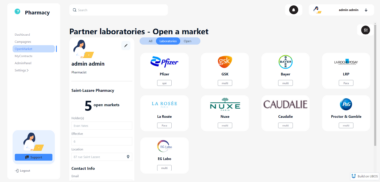

E-Commerce Demo Application

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.
