Frequently Asked Questions about aicommit

Q: What is aicommit?

A: Aicommit is a command-line tool that uses Large Language Models (LLMs) to generate concise and descriptive Git commit messages automatically.

Q: How does aicommit work?

A: Aicommit passes the diff of your changes to an LLM, which generates a commit message that summarizes the modifications you’ve made.
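The flow described above can be sketched as follows, assuming the tool reads the staged diff with git diff --cached and wraps it in a prompt for the model. The prompt wording, the function names, and the max_chars truncation limit are hypothetical illustrations, not aicommit’s actual values.

```python
import subprocess

# Hypothetical prompt wording; aicommit's real prompt may differ.
PROMPT_TEMPLATE = (
    "Write a concise Git commit message that describes the following changes:\n\n{diff}"
)


def staged_diff() -> str:
    """Return the diff of staged changes (must run inside a Git repository)."""
    result = subprocess.run(
        ["git", "diff", "--cached"], capture_output=True, text=True, check=True
    )
    return result.stdout


def build_prompt(diff: str, max_chars: int = 8000) -> str:
    """Wrap a diff in the prompt, truncating very large diffs (hypothetical limit)."""
    if not diff.strip():
        raise ValueError("no staged changes to describe")
    if len(diff) > max_chars:
        diff = diff[:max_chars] + "\n[diff truncated]"
    return PROMPT_TEMPLATE.format(diff=diff)
```

Inside a repository with staged changes, build_prompt(staged_diff()) produces the text that would be sent to the model.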

Q: What LLM providers are supported by aicommit?

A: Aicommit supports OpenRouter, Simple Free OpenRouter, Ollama, and OpenAI-compatible API endpoints.

Q: What is Simple Free Mode in aicommit?

A: Simple Free Mode allows you to use OpenRouter’s free models without needing to manually select them. Aicommit automatically selects the best available free model and handles failover and performance tracking.
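This FAQ doesn’t describe how Simple Free Mode ranks models, so the sketch below only illustrates the general idea under stated assumptions: each free model’s past successes and failures are counted, models are tried best-first, and a failed call fails over to the next model. The class names, the smoothed score, and the placeholder model IDs used in the usage note are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelStats:
    successes: int = 0
    failures: int = 0

    def score(self) -> float:
        # Laplace-smoothed success rate, so an untried model scores 0.5
        return (self.successes + 1) / (self.successes + self.failures + 2)


@dataclass
class FreeModelPicker:
    models: list[str]
    stats: dict[str, ModelStats] = field(default_factory=dict)

    def ranked(self) -> list[str]:
        # Best-performing models first; sorted() is stable, so ties keep list order
        return sorted(
            self.models,
            key=lambda m: self.stats.get(m, ModelStats()).score(),
            reverse=True,
        )

    def record(self, model: str, ok: bool) -> None:
        stats = self.stats.setdefault(model, ModelStats())
        if ok:
            stats.successes += 1
        else:
            stats.failures += 1

    def generate(self, call: Callable[[str], str]) -> tuple[str, str]:
        """Try models best-first and fail over to the next one when a call raises."""
        for model in self.ranked():
            try:
                text = call(model)
            except Exception:
                self.record(model, ok=False)
                continue
            self.record(model, ok=True)
            return model, text
        raise RuntimeError("every free model failed")
```

For example, FreeModelPicker(["model-a:free", "model-b:free"]).generate(call) tries model-a first; if that call raises, it returns model-b’s result, and model-b ranks first on later runs. Failover comes from walking the ranked list, and the performance tracking is the pair of counters that reorders it.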

Q: How do I install aicommit?

A: Install aicommit globally with npm (npm install -g @suenot/aicommit) or with Cargo (cargo install aicommit).

Q: How do I generate a commit message with aicommit?

A: After installing aicommit, stage your changes (for example, with git add .) and then run aicommit to generate a commit message. Alternatively, run aicommit --add to stage and commit your changes automatically.

Q: Can I customize the generated commit messages?

A: Yes, you can customize the behavior of aicommit by editing the configuration file located at ~/.aicommit.json. You can modify settings like the active LLM provider, maximum tokens, and temperature.
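Because the configuration is a JSON file, it can also be edited programmatically. The sketch below uses Python’s json module to merge new values into the file. The key names max_tokens and temperature and the example values are assumptions for illustration; check your own ~/.aicommit.json for the exact schema, which may nest these settings inside a per-provider entry.

```python
import json
from pathlib import Path

# Location given in the FAQ.
CONFIG_PATH = Path.home() / ".aicommit.json"


def update_settings(path: Path, **changes) -> dict:
    """Load the JSON config at `path`, merge top-level `changes`, and save it.

    The key names passed in are assumptions; match them to your own file.
    """
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    config.update(changes)
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, update_settings(CONFIG_PATH, max_tokens=200, temperature=0.3) would set those two hypothetical keys while keeping the rest of the file. Back up the file first if you try this on a real configuration.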

Q: Is there a VS Code extension for aicommit?

A: Yes, there is a VS Code extension available for seamless integration with the editor. See the VS Code Extension README for more details.

Q: What is the watch mode in aicommit?

A: The watch mode allows you to automatically commit changes when files are modified. Use aicommit --watch to continuously monitor files and commit changes as they occur.
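How aicommit detects changes in watch mode isn’t documented in this FAQ; the sketch below only illustrates the underlying idea with simple modification-time polling. The function names and the two-second interval are hypothetical, and production watchers usually rely on operating-system file-change notifications rather than polling.

```python
import os
import time
from typing import Callable


def snapshot(root: str) -> dict[str, float]:
    """Map every file under `root` (skipping .git) to its modification time."""
    mtimes: dict[str, float] = {}
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d != ".git"]  # prune .git in place
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.path.getmtime(path)
            except FileNotFoundError:
                continue  # file was deleted between listing and stat
    return mtimes


def changed_files(before: dict[str, float], after: dict[str, float]) -> set[str]:
    """Files that were added, deleted, or modified between two snapshots."""
    return {p for p in before.keys() | after.keys() if before.get(p) != after.get(p)}


def watch(root: str, on_change: Callable[[set[str]], None], interval: float = 2.0) -> None:
    """Poll forever; call `on_change` (e.g. stage and commit) whenever files change."""
    previous = snapshot(root)
    while True:
        time.sleep(interval)
        current = snapshot(root)
        diff = changed_files(previous, current)
        if diff:
            on_change(diff)
        previous = current
```

Skipping the .git directory matters here: a commit triggered by on_change only writes inside .git, so it does not immediately trigger another round of changes.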

Q: How do I manage model jails and blacklists?

A: Aicommit provides commands for managing model jails and blacklists. Use aicommit --jail-status to show the current status of all models. Use aicommit --unjail="model_id" to release a specific model, and aicommit --unjail-all to release all models.
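This FAQ doesn’t say when a model gets jailed or blacklisted, so the policy below is an assumption made purely for illustration: three consecutive failures jail a model for an hour, each later jailing doubles the term, and a third jailing blacklists it. The methods jail_status, unjail, and unjail_all only mirror the CLI flags by name; they are not aicommit’s code, and the assumption that unjailing also clears a blacklist is hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelRecord:
    consecutive_failures: int = 0
    jail_count: int = 0
    jailed_until: float = 0.0
    blacklisted: bool = False


class ModelJail:
    """Hypothetical jail/blacklist policy; all thresholds are illustrative."""

    def __init__(
        self,
        failure_threshold: int = 3,
        base_jail_seconds: float = 3600.0,
        blacklist_after: int = 3,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.base_jail_seconds = base_jail_seconds
        self.blacklist_after = blacklist_after
        self.clock = clock
        self.records: dict[str, ModelRecord] = {}

    def record_failure(self, model: str) -> None:
        r = self.records.setdefault(model, ModelRecord())
        r.consecutive_failures += 1
        if r.consecutive_failures >= self.failure_threshold:
            r.consecutive_failures = 0
            r.jail_count += 1
            # Each repeat jailing doubles the jail term
            r.jailed_until = self.clock() + self.base_jail_seconds * 2 ** (r.jail_count - 1)
            if r.jail_count >= self.blacklist_after:
                r.blacklisted = True

    def record_success(self, model: str) -> None:
        self.records.setdefault(model, ModelRecord()).consecutive_failures = 0

    def status(self, model: str) -> str:
        r = self.records.get(model)
        if r is None:
            return "active"
        if r.blacklisted:
            return "blacklisted"
        return "jailed" if self.clock() < r.jailed_until else "active"

    def is_available(self, model: str) -> bool:
        return self.status(model) == "active"

    def jail_status(self) -> dict[str, str]:
        """Analogue of --jail-status: the state of every tracked model."""
        return {m: self.status(m) for m in self.records}

    def unjail(self, model: str) -> None:
        """Analogue of --unjail: clear one model's jail and blacklist state."""
        self.records.pop(model, None)

    def unjail_all(self) -> None:
        """Analogue of --unjail-all: clear every model's state."""
        self.records.clear()
```

Injecting the clock keeps the policy testable without waiting an hour; in normal use the default time.time is used.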

Q: How can I configure aicommit to use LM Studio as an OpenAI-compatible API endpoint?

A: Start LM Studio, load a model, and start the server. Then, configure aicommit by selecting ‘OpenAI Compatible’ and setting the API Key to lm-studio, API URL to http://localhost:1234/v1/chat/completions (or the URL shown in LM Studio), and Model to lm-studio-model (or any descriptive name).
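To check the LM Studio settings above, it helps to see what a request to an OpenAI-compatible chat-completions endpoint looks like. The sketch builds one with Python’s standard urllib using the URL, API key, and model name from this answer; the prompt, max_tokens, and temperature values are illustrative, and aicommit’s own request may include different fields.

```python
import json
import urllib.request

API_URL = "http://localhost:1234/v1/chat/completions"  # LM Studio URL from the FAQ
API_KEY = "lm-studio"  # placeholder key from the FAQ
MODEL = "lm-studio-model"  # descriptive model name from the FAQ


def build_request(
    prompt: str, max_tokens: int = 100, temperature: float = 0.3
) -> urllib.request.Request:
    """Build an OpenAI-style chat-completions POST request (values illustrative)."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the LM Studio server running, send(build_request("Say hello")) should return the loaded model’s reply; if it fails, the URL shown in LM Studio is the first thing to compare against API_URL.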

Q: What does ‘Tokens: X↑ Y↓’ mean in the output?

A: In the output, ‘Tokens: X↑ Y↓’ indicates the number of tokens used, where X↑ is the number of input tokens and Y↓ is the number of output tokens.
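If you want to log token usage across commits, the counts can be pulled out of that line with a regular expression. This is a minimal sketch that assumes the line follows exactly the ‘Tokens: X↑ Y↓’ shape described above; the function name and the example counts are hypothetical.

```python
import re

# Assumes the "Tokens: X↑ Y↓" format described in the FAQ.
TOKENS_RE = re.compile(r"Tokens:\s*(\d+)↑\s*(\d+)↓")


def parse_tokens(line: str) -> tuple[int, int]:
    """Return (input_tokens, output_tokens) from an aicommit output line."""
    match = TOKENS_RE.search(line)
    if match is None:
        raise ValueError(f"no token counts in {line!r}")
    return int(match.group(1)), int(match.group(2))
```

For a line such as Tokens: 1250↑ 18↓, parse_tokens returns (1250, 18): 1250 input tokens and 18 output tokens.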

AI Commit Message Generator

Project Details

- suenot/aicommit

- MIT License

- Last Updated: 5/18/2025

Recomended MCP Servers

Config files for my GitHub profile.

MCP server that uses arxiv-to-prompt to fetch and process arXiv LaTeX sources for precise interpretation of mathematical expressions...

single cell amateur

MCP server that visually reviews your agent's design edits

A Model Context Protocol (MCP) server for generating simple QR codes. Support custom QR code styles.

mcp server for cloudflare flux schnell worker api.

A mockup full stack app built with React, FastAPI, MongoDB, and Docker, powered by CLIP for multi-tagging and...

MCP server for interacting with Apache Iceberg catalog from Claude, enabling data lake discovery and metadata search through...

A Model Context Protocol (MCP) server for Gmail integration in Claude Desktop with auto authentication support. This server...

A Model Context Protocol (MCP) server that provides weather information and alerts using the National Weather Service (NWS)...

《使用T4批量生成Model和基于Dapper的DAL》

youtube embedding

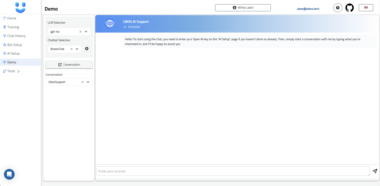

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.