Frequently Asked Questions about MCP Server

Q: What is MCP Server?

A: MCP Server is the UBOS listing of vLLM, a high-throughput and memory-efficient inference and serving engine for Large Language Models (LLMs). It is designed to make LLM serving faster and cheaper by pushing more requests through each GPU while using less memory.

Q: How does MCP Server improve LLM performance?

A: MCP Server uses techniques like PagedAttention for efficient memory management, continuous batching of requests, and optimized CUDA kernels for fast model execution.
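The difference between continuous batching and static batching can be sketched as a small Python simulation. It is a toy model under stated assumptions (every running request emits exactly one token per step; the `Request` class and `continuous_batching` function are hypothetical names), not the scheduler MCP Server actually ships.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    """Hypothetical request: an id and how many tokens it still needs to generate."""
    rid: str
    remaining: int


def continuous_batching(requests, max_batch_size):
    """Simulate iteration-level (continuous) batching.

    Each loop iteration is one decoding step in which every running request
    emits one token. Finished requests leave immediately and waiting requests
    take their slots on the next step, instead of waiting for the whole batch
    to drain as static batching would. Returns (steps, completion order).
    """
    waiting = deque(requests)
    running = []
    finished = []
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free batch slots.
        while waiting and len(running) < max_batch_size:
            running.append(waiting.popleft())
        # One decoding step: each running request produces one token.
        for req in running:
            req.remaining -= 1
        steps += 1
        # Retire finished requests right away, freeing their slots.
        still_running = []
        for req in running:
            if req.remaining == 0:
                finished.append(req.rid)
            else:
                still_running.append(req)
        running = still_running
    return steps, finished
```

For example, with a batch size of 2 and four requests needing 1, 5, 1, and 1 tokens, this schedule finishes in 5 steps, while static batching ([a, b] then [c, d]) would take 6, because the short requests no longer wait behind the long one.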

Q: What is PagedAttention?

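A: PagedAttention is a memory management technique for the attention key-value (KV) cache. Instead of reserving one contiguous region per request, it stores keys and values in fixed-size blocks that can sit anywhere in GPU memory, much like pages in an operating system's virtual memory. This cuts wasted memory, so more requests fit on the GPU at once, which matters most for large models.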
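The block-table idea can be illustrated with a toy Python allocator. `PagedKVCache` and its methods are hypothetical names for this sketch; it only tracks which physical block holds which token and stores no actual key or value tensors.

```python
class PagedKVCache:
    """Toy block manager in the spirit of PagedAttention (not the real implementation).

    The KV cache is split into fixed-size physical blocks. Each sequence keeps a
    block table mapping its logical blocks to physical block ids, so its blocks
    need not be contiguous.
    """

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.lengths = {}       # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve space for one more token, allocating a block only when needed."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:
            # Last block is full (or none exists yet): take any free block.
            if not self.free_blocks:
                raise MemoryError("KV cache is out of blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def physical_slot(self, seq_id, token_index):
        """Translate a token position into (physical block id, offset in block)."""
        block = self.block_tables[seq_id][token_index // self.block_size]
        return block, token_index % self.block_size

    def free(self, seq_id):
        """Return all blocks of a finished sequence to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id))
        del self.lengths[seq_id]
```

Because a new block is taken only when the previous one fills, a sequence wastes at most `block_size - 1` slots, and blocks freed by one sequence can be reused by any other.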

Q: Which models are supported by MCP Server?

A: MCP Server supports most popular open-source models on Hugging Face, including Transformer-like LLMs (e.g., Llama), Mixture-of-Experts LLMs (e.g., Mixtral), embedding models (e.g., E5-Mistral), and multi-modal LLMs (e.g., LLaVA).

Q: What kind of hardware is compatible with MCP Server?

A: MCP Server supports NVIDIA GPUs, AMD CPUs and GPUs, Intel CPUs and GPUs, PowerPC CPUs, Google TPUs, and AWS Neuron.

Q: Does MCP Server support quantization?

A: Yes, MCP Server supports GPTQ, AWQ, INT4, INT8, and FP8 quantization, which stores model weights in fewer bits so a model needs less GPU memory and can often run faster.
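A rough sense of what quantization saves comes from weight-memory arithmetic: bytes = parameters x bits per weight / 8. The sketch below counts weights only, and the `weight_memory_gb` helper is a hypothetical name.

```python
# Bits used to store one weight in each format (weights only).
BITS_PER_WEIGHT = {
    "fp16": 16,
    "fp8": 8,
    "int8": 8,
    "int4": 4,
}


def weight_memory_gb(num_params, fmt):
    """Approximate memory for model weights in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and the per-group scale and
    zero-point metadata that formats such as GPTQ and AWQ also store.
    """
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    for fmt in ("fp16", "int8", "int4"):
        print(fmt, weight_memory_gb(7e9, fmt))
```

For a 7-billion-parameter model this gives 14 GB in FP16, 7 GB in INT8 or FP8, and 3.5 GB in INT4, before counting the KV cache.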

Q: How can I install MCP Server?

A: You can install MCP Server with `pip install vllm` or build it from source. Refer to the vLLM documentation for detailed instructions.

Q: Is there an API available for MCP Server?

A: Yes, MCP Server has an OpenAI-compatible API server, making it easy to integrate into existing workflows.
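As a minimal sketch, a client can build a request for the server's OpenAI-compatible chat endpoint using only the Python standard library. It assumes a server is already running at `http://localhost:8000` (vLLM's default port, e.g. started with `vllm serve <model>`); `my-model` is a placeholder that must match the model the server was launched with, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message, max_tokens=64):
    """Build (but do not send) a POST to an OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder base URL and model name; adjust to your deployment.
req = build_chat_request("http://localhost:8000", "my-model", "Hello!")
```

To actually call the server, pass `req` to `urllib.request.urlopen` and read the reply text from `choices[0].message.content` in the decoded JSON. An OpenAI client library pointed at the same base URL should work the same way.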

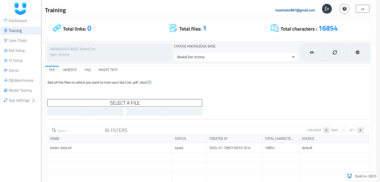

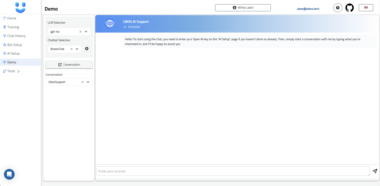

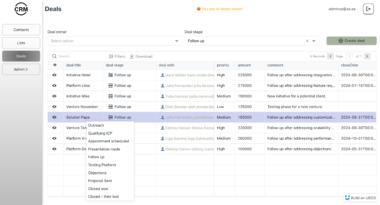

Q: How does MCP Server integrate with UBOS?

A: MCP Server is available on the UBOS Asset Marketplace, so it can be deployed and managed within the UBOS ecosystem. UBOS simplifies deployment, provides centralized management, and lets you connect your own data sources to the deployed model.

Q: Where can I find more information about MCP Server?

A: You can find more information on the vLLM website and in the vLLM documentation.

Q: How can I contribute to MCP Server development?

A: Contributions are welcome! See the CONTRIBUTING.md file in the project repository for information on how to get involved.

vLLM

Project Details

- qijsi/vllm

- Apache License 2.0

- Last Updated: 3/3/2025

Recommended MCP Servers

MCP Server for Zerocracy: add it to Claude Desktop and enjoy vibe-management

mcp server for openweather free api

MCP Deep Research is a tool that allows you to search the web for information. It is built...

OpenWorkspace-o1 S3 Model Context Protocol Server.

An MCP server installer designed for Cursor that makes it easy to extend AI capabilities

Preference Editor MCP server

MCP server to assist with AI code generation using Claude Desktop, Claude Code or any coding tool that...

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.