Frequently Asked Questions

What is the MCP Server? The MCP Server is built on the Model Context Protocol (MCP), an open protocol that standardizes how applications provide context to LLMs. It acts as a bridge that lets AI models access and interact with external data sources and tools.
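MCP messages are JSON-RPC 2.0 objects, and a client typically discovers a server's tools with a `tools/list` request. The sketch below shows that framing. It is not code from openai-tool2mcp: the helper names `make_request` and `tool_names` are hypothetical, and the `web_search` entry in the sample response is a hand-written placeholder, not real server output.

```python
import json
from typing import Any, Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, e.g. an MCP "tools/list" call (hypothetical helper)."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


def tool_names(response_text: str) -> list:
    """Return the tool names from a tools/list response, raising on a JSON-RPC error."""
    response = json.loads(response_text)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    return [tool["name"] for tool in response["result"]["tools"]]


# Hand-written sample response; a real server would send this over its transport.
sample_response = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [{"name": "web_search", "inputSchema": {"type": "object"}}]},
})

print(make_request(1, "tools/list"))
print(tool_names(sample_response))
```

Keeping serialization separate from transport reflects how MCP works: the same JSON-RPC messages travel over stdio or HTTP-based transports.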

How does MCP Server integrate with OpenAI tools? MCP Server wraps OpenAI's built-in tools and translates between OpenAI's proprietary tool interface and the open MCP standard, so the two can work together.
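To illustrate the kind of protocol translation involved, here is a minimal sketch that maps an OpenAI Chat Completions-style function tool call onto an MCP `tools/call` request, then maps the MCP result back into an OpenAI `tool` message. It is an assumption-based illustration, not the project's implementation: the function names and the `get_weather` tool are hypothetical, and only text content blocks are handled.

```python
import json
from typing import Any


def openai_call_to_mcp(tool_call: dict, request_id: int) -> dict:
    """Convert an OpenAI-style tool call into an MCP tools/call JSON-RPC request."""
    fn = tool_call["function"]
    # OpenAI encodes arguments as a JSON string; MCP expects a JSON object.
    arguments: Any = json.loads(fn["arguments"]) if fn.get("arguments") else {}
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": fn["name"], "arguments": arguments},
    }


def mcp_result_to_openai(result: dict, tool_call_id: str) -> dict:
    """Convert an MCP tools/call result into an OpenAI 'tool' role message.

    This sketch forwards text blocks only and does not inspect "isError".
    """
    texts = [block["text"] for block in result.get("content", []) if block.get("type") == "text"]
    return {"role": "tool", "tool_call_id": tool_call_id, "content": "\n".join(texts)}


# Hypothetical sample data for illustration.
sample_call = {
    "id": "call_123",
    "type": "function",
    "function": {"name": "get_weather", "arguments": "{\"city\": \"Paris\"}"},
}
sample_result = {"content": [{"type": "text", "text": "Sunny, 21 C"}], "isError": False}

print(openai_call_to_mcp(sample_call, request_id=7))
print(mcp_result_to_openai(sample_result, sample_call["id"]))
```

The main mismatch the sketch handles is argument encoding: OpenAI passes arguments as a JSON string, while MCP passes them as a structured object.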

What are the benefits of using MCP Server with UBOS? Using MCP Server with UBOS enhances AI capabilities by providing a full-stack development platform that allows for the orchestration of AI Agents, integration with enterprise data, and the creation of custom AI solutions.

Is MCP Server compatible with other AI platforms? Yes, MCP Server is designed to be interoperable with various platforms and applications, thanks to its adherence to open standards and full compatibility with the MCP SDK.

How can I get started with MCP Server? Install the MCP Server package from PyPI, set your OpenAI API key, and follow the setup instructions to integrate it with your existing applications.
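Before starting the server, it can help to fail fast if the API key is missing. The official OpenAI Python SDK reads the key from the `OPENAI_API_KEY` environment variable by default; whether this server uses that same variable is an assumption here, and `require_openai_api_key` is a hypothetical helper, not part of the package.

```python
import os
from typing import Mapping, Optional


def require_openai_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return OPENAI_API_KEY from the environment, or raise if it is missing or blank.

    Assumes the server reads the same variable as the OpenAI Python SDK.
    """
    env = os.environ if env is None else env
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; export it before starting the server.")
    return key
```

Calling `require_openai_api_key()` at the top of a launch script surfaces a clear error instead of a failed request later. Passing a mapping explicitly makes the check easy to test without touching the real environment.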

OpenAI Tool Bridge

Project Details

- alohays/openai-tool2mcp

- MIT License

- Last Updated: 4/6/2025

Recommended MCP Servers

Dify 1.0 plugin: converts your Dify tools' API into an MCP-compatible API

The official TypeScript library for the Dodo Payments API

AI Observability & Evaluation

This project demonstrates how to use EdgeOne Pages Functions to retrieve user geolocation information and integrate it with...

Can be used to integrate an MCP server with Cursor

Model Context Protocol server for Replicate's API

An MCP (Model Context Protocol) server that provides Ethereum blockchain data tools via Etherscan's API. Features include checking...

A model context protocol server to work with JetBrains IDEs: IntelliJ, PyCharm, WebStorm, etc. Also, works with Android...

A Model Context Protocol (MCP) server for research and documentation assistance using Perplexity AI. Won 1st @ Cline...

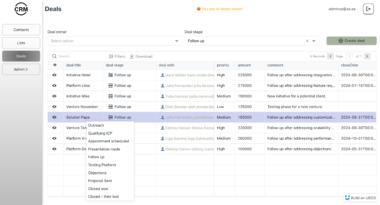

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.