Databricks MCP Server

A Model Context Protocol (MCP) server that connects to Databricks API, allowing LLMs to run SQL queries, list jobs, and get job status.

Features

- Run SQL queries on Databricks SQL warehouses

- List all Databricks jobs

- Get status of specific Databricks jobs

- Get detailed information about Databricks jobs

Prerequisites

- Python 3.7+

- Databricks workspace with:

- Personal access token

- SQL warehouse endpoint

- Permissions to run queries and access jobs

Setup

- Clone this repository

- Create and activate a virtual environment (recommended):

python -m venv .venv source .venv/bin/activate # On Windows: .venvScriptsactivate - Install dependencies:

pip install -r requirements.txt - Create a

.envfile in the root directory with the following variables:DATABRICKS_HOST=your-databricks-instance.cloud.databricks.com DATABRICKS_TOKEN=your-personal-access-token DATABRICKS_HTTP_PATH=/sql/1.0/warehouses/your-warehouse-id - Test your connection (optional but recommended):

python test_connection.py

Obtaining Databricks Credentials

- Host: Your Databricks instance URL (e.g.,

your-instance.cloud.databricks.com) - Token: Create a personal access token in Databricks:

- Go to User Settings (click your username in the top right)

- Select “Developer” tab

- Click “Manage” under “Access tokens”

- Generate a new token, and save it immediately

- HTTP Path: For your SQL warehouse:

- Go to SQL Warehouses in Databricks

- Select your warehouse

- Find the connection details and copy the HTTP Path

Running the Server

Start the MCP server:

python main.py

You can test the MCP server using the inspector by running

npx @modelcontextprotocol/inspector python3 main.py

Available MCP Tools

The following MCP tools are available:

- run_sql_query(sql: str) - Execute SQL queries on your Databricks SQL warehouse

- list_jobs() - List all Databricks jobs in your workspace

- get_job_status(job_id: int) - Get the status of a specific Databricks job by ID

- get_job_details(job_id: int) - Get detailed information about a specific Databricks job

Example Usage with LLMs

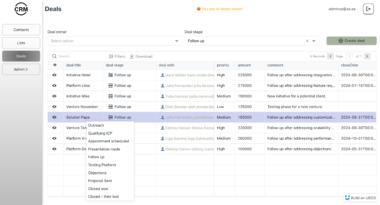

When used with LLMs that support the MCP protocol, this server enables natural language interaction with your Databricks environment:

- “Show me all tables in the database”

- “Run a query to count records in the customer table”

- “List all my Databricks jobs”

- “Check the status of job #123”

- “Show me details about job #456”

Troubleshooting

Connection Issues

- Ensure your Databricks host is correct and doesn’t include

https://prefix - Check that your SQL warehouse is running and accessible

- Verify your personal access token has the necessary permissions

- Run the included test script:

python test_connection.py

Security Considerations

- Your Databricks personal access token provides direct access to your workspace

- Secure your

.envfile and never commit it to version control - Consider using Databricks token with appropriate permission scopes only

- Run this server in a secure environment

Databricks MCP Server

Project Details

- JordiNeil/mcp-databricks-server

- Last Updated: 4/18/2025

Categories

Recomended MCP Servers

A Model Context Protocol (MCP) server for ATLAS, a Neo4j-powered task management system for LLM Agents - implementing...

MCP Server for AI Agent Marketplace Index from DeepNLP

A FastMCP server implementation for the Semantic Scholar API, providing comprehensive access to academic paper data, author information,...

MCP for https://votars.ai

A Model Control Protocol (MCP) server that allows Claude to communicate with locally running LLM models via LM...

MCP server that gives Claude ability to use OpenAI's GPTs assistants

MCP server for working with PDF files

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.