MCP Server: Revolutionizing AI Integration with Seamless LLM Connectivity

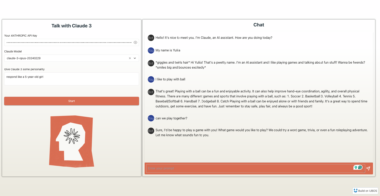

In the rapidly evolving landscape of artificial intelligence, the need for seamless integration with diverse Large Language Models (LLMs) is paramount. Enter the MCP Server, a model-agnostic Model Context Protocol (MCP) server that serves as a bridge, enabling AI agents to interact through a single interface with various LLMs such as GPT, DeepSeek, Claude, and more. This capability makes the MCP Server a useful tool for businesses seeking to harness the power of AI-driven solutions.

Use Cases

The versatility of the MCP Server opens up a plethora of use cases across different industries:

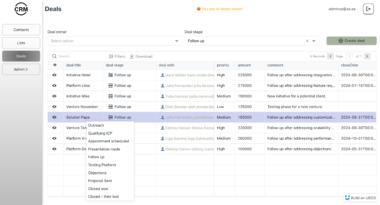

Enterprise AI Integration: Businesses can integrate multiple AI models into their workflows, allowing for enhanced decision-making and automation. Imagine a customer support system that seamlessly switches between models like GPT and Claude to provide accurate responses and handle complex queries.

AI-Driven Content Creation: Content creators can leverage the MCP Server to access various language models, ensuring diverse and creative outputs tailored to specific audiences. This is particularly beneficial for marketing campaigns that require unique and engaging content.

Research and Development: Researchers can utilize the MCP Server to experiment with different LLMs, facilitating comparative studies and innovation in AI technologies. This enables the development of cutting-edge solutions and advancements in machine learning.

Educational Tools: Educational platforms can integrate multiple LLMs to provide personalized learning experiences. By offering diverse perspectives and explanations, the MCP Server enhances the educational journey for students.

Key Features

The MCP Server is packed with features designed to streamline AI integration:

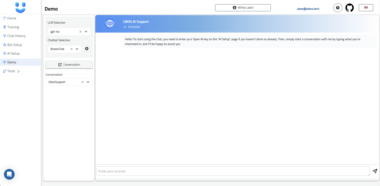

Unified Interface: The server provides a standardized interface to multiple LLM providers, including OpenAI (GPT models), Anthropic (Claude models), Google (Gemini models), and DeepSeek. This unification simplifies the process of switching between models or utilizing multiple models in a single application.
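As a rough illustration of what a unified interface can look like, the sketch below routes a single "provider:model" string to a provider-specific handler. The function name run_llm, the handler registry, and the provider prefixes are assumptions made for this example, not the server's confirmed API, and the handlers only echo their input instead of calling a real provider.

```python
from typing import Callable, Dict


def _fake_handler(provider: str) -> Callable[[str, str], str]:
    """Build a stand-in handler; a real one would call the provider's SDK."""
    def handle(model: str, prompt: str) -> str:
        return f"[{provider}/{model}] {prompt}"
    return handle


# Hypothetical registry: the prefixes mirror the providers named in the text.
PROVIDERS: Dict[str, Callable[[str, str], str]] = {
    name: _fake_handler(name)
    for name in ("openai", "anthropic", "google", "deepseek")
}


def run_llm(model_id: str, prompt: str) -> str:
    """Dispatch a 'provider:model' identifier to the matching handler."""
    provider, sep, model = model_id.partition(":")
    if not sep or not model:
        raise ValueError(f"expected 'provider:model', got {model_id!r}")
    if provider not in PROVIDERS:
        raise ValueError(f"unknown provider {provider!r}")
    return PROVIDERS[provider](model, prompt)


if __name__ == "__main__":
    # Switching providers only changes the identifier string.
    print(run_llm("openai:model-a", "Summarize this ticket."))
    print(run_llm("anthropic:model-b", "Summarize this ticket."))
```

With this shape, an application that wants to compare or swap models changes one string and leaves the calling code untouched.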

Customizable Parameters: Users can set generation parameters such as temperature and max tokens on each request, allowing for tailored outputs that meet specific needs. These settings control sampling randomness and response length at inference time; they do not retrain or fine-tune the underlying model.
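To make the parameters concrete, here is a minimal sketch of a validated parameter object using only the standard library. The class name GenerationParams, the default values, and the accepted ranges are assumptions for illustration; actual limits differ by provider and are not stated in the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationParams:
    """Hypothetical container for per-request generation settings."""
    temperature: float = 0.7  # assumed default; higher means more random output
    max_tokens: int = 1024    # assumed default; upper bound on response length

    def __post_init__(self) -> None:
        # The 0.0 to 2.0 range is an assumption; some providers cap at 1.0.
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0.0 and 2.0")
        if self.max_tokens < 1:
            raise ValueError("max_tokens must be a positive integer")


if __name__ == "__main__":
    precise = GenerationParams(temperature=0.1, max_tokens=256)
    creative = GenerationParams(temperature=1.2)
    print(precise, creative)
```

A low temperature suits tasks such as extraction or classification, while a higher value tends to suit brainstorming or marketing copy.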

Built with Pydantic AI: The server is constructed using Pydantic AI, ensuring type safety and validation. This robust framework enhances the reliability and efficiency of the MCP Server.

Usage Tracking and Metrics: The server provides comprehensive usage tracking and metrics, enabling businesses to monitor performance and make data-driven decisions.
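The source does not say which metrics the server records, so the following is only a sketch of one plausible approach: accumulating request and token counts per model. The names UsageTotals and UsageTracker, and the choice of fields, are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict


@dataclass
class UsageTotals:
    """Running totals for one model (hypothetical fields)."""
    requests: int = 0
    input_tokens: int = 0
    output_tokens: int = 0

    @property
    def total_tokens(self) -> int:
        return self.input_tokens + self.output_tokens


@dataclass
class UsageTracker:
    """Accumulates usage per model identifier."""
    per_model: DefaultDict[str, UsageTotals] = field(
        default_factory=lambda: defaultdict(UsageTotals)
    )

    def record(self, model_id: str, input_tokens: int, output_tokens: int) -> None:
        totals = self.per_model[model_id]
        totals.requests += 1
        totals.input_tokens += input_tokens
        totals.output_tokens += output_tokens


if __name__ == "__main__":
    tracker = UsageTracker()
    tracker.record("openai:model-a", input_tokens=120, output_tokens=40)
    tracker.record("openai:model-a", input_tokens=80, output_tokens=60)
    print(tracker.per_model["openai:model-a"].total_tokens)
```

Totals of this kind are enough to compare cost and load across providers before deciding which model handles which workload.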

Installation and Configuration

Installing the MCP Server is straightforward, with options for both automatic and manual installation. The integration with platforms like Smithery ensures a hassle-free setup, while the manual installation process is detailed and user-friendly.

Automatic Installation via Smithery: Users can install the MCP Server for Claude Desktop automatically, ensuring quick deployment and minimal setup time.

Manual Installation: The manual installation process involves cloning the repository and installing necessary dependencies like uv. Detailed instructions are provided to ensure a smooth installation experience.

Configuration: Users can configure the server by creating a .env file with API keys for different LLM providers. This customization ensures secure and efficient integration with various models.
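The sketch below shows one way to read such a .env file and report which providers have a key set. The variable names (OPENAI_API_KEY and so on) follow common convention and are assumptions here; check the project's own documentation for the exact names it expects. The parser is deliberately simple and is not a full .env implementation.

```python
from typing import Dict, List

# Assumed variable names, one per provider mentioned in the text.
ASSUMED_KEYS: Dict[str, str] = {
    "openai": "OPENAI_API_KEY",
    "anthropic": "ANTHROPIC_API_KEY",
    "google": "GOOGLE_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
}


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks, comments, and malformed lines."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def configured_providers(env: Dict[str, str]) -> List[str]:
    """Return the providers whose assumed key is present and non-empty."""
    return [name for name, key in ASSUMED_KEYS.items() if env.get(key)]


if __name__ == "__main__":
    sample = 'OPENAI_API_KEY=your-key-here\n# optional\nDEEPSEEK_API_KEY="another-key"\n'
    print(configured_providers(parse_env(sample)))
```

Only providers with a key in the file are usable, so a check like this at startup gives a clear error before the first request fails.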

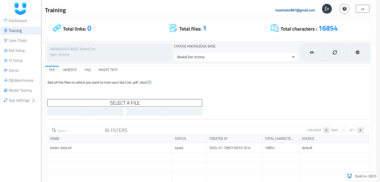

UBOS Platform: Empowering AI Agent Development

As a full-stack AI Agent Development Platform, UBOS is dedicated to bringing AI Agents to every business department. Our platform facilitates the orchestration of AI Agents, connecting them with enterprise data and enabling the creation of custom AI Agents using LLM models and Multi-Agent Systems. By leveraging the MCP Server, UBOS enhances its capability to deliver comprehensive AI solutions tailored to the unique needs of each business.

In conclusion, the MCP Server stands as a pivotal tool in the AI ecosystem, offering seamless integration with diverse LLMs and unlocking new possibilities for businesses across industries. Its robust features, ease of installation, and customization options make it an ideal choice for enterprises seeking to harness the power of AI-driven solutions.

LLM Bridge MCP

Project Details

- sjquant/llm-bridge-mcp

- MIT License

- Last Updated: 3/28/2025

Recommended MCP Servers

A Model Context Protocol (MCP) server that provides tools to interact with Folderr's API

A middleware server that enables multiple isolated instances of the same MCP servers to coexist independently with unique...

An MCP server in development for Google Scholar

An MCP server for interacting with the Financial Datasets stock market API.

A Model Context Protocol server that provides real-time hot trending topics from major Chinese social platforms and news...

An MCP server connecting to managed indexes on LlamaCloud

A Serper MCP Server

Advanced MCP server for Firebase Firestore with support for all advanced features

Connect APIs, remarkably fast. Free for developers.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.
