MCP Chat Backend

This project is a serverless FastAPI backend for a chatbot that generates and executes SQL queries on a Postgres database using OpenAI’s GPT models, then returns structured, UI-friendly responses. It is designed to run on AWS Lambda via AWS SAM, but can also be run locally or in Docker.

Features

- FastAPI REST API with a single /ask endpoint

- Uses OpenAI GPT models to generate and summarize SQL queries

- Connects to a Postgres (Supabase) database

- Returns structured JSON responses for easy frontend rendering

- CORS enabled for frontend integration

- Deployable to AWS Lambda (SAM), or run locally/Docker

- Verbose logging for debugging (CloudWatch)

Project Structure

├── main.py # Main FastAPI app and Lambda handler

├── requirements.txt # Python dependencies

├── template.yaml # AWS SAM template for Lambda deployment

├── samconfig.toml # AWS SAM deployment config

├── Dockerfile # For local/Docker deployment

├── .gitignore # Files to ignore in git

└── .env # (Not committed) Environment variables

Setup

1. Clone the repository

git clone <your-repo-url>

cd mcp-chat-3

2. Install Python dependencies

python -m venv .venv

source .venv/bin/activate # or .venv\Scripts\activate on Windows

pip install -r requirements.txt

3. Set up environment variables

Create a .env file (not committed to git):

OPENAI_API_KEY=your-openai-key

SUPABASE_DB_NAME=your-db

SUPABASE_DB_USER=your-user

SUPABASE_DB_PASSWORD=your-password

SUPABASE_DB_HOST=your-host

SUPABASE_DB_PORT=your-port

Running Locally

With Uvicorn

uvicorn main:app --reload --port 8080

With Docker

docker build -t mcp-chat-backend .

docker run -p 8080:8080 --env-file .env mcp-chat-backend

Deploying to AWS Lambda (SAM)

- Install AWS SAM CLI

- Build and deploy:

sam build

sam deploy --guided

- Configure environment variables in template.yaml or via the AWS Console.

- The API will be available at the endpoint shown after deployment (e.g. https://xxxxxx.execute-api.region.amazonaws.com/Prod/ask).

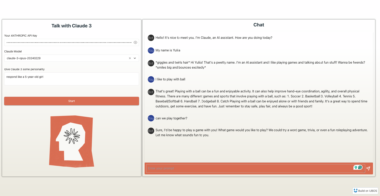

API Usage

POST /ask

- Body:

{ "question": "your question here" }

- Response: Structured JSON for chatbot UI, e.g.

{
  "messages": [
    {
      "type": "text",
      "content": "Sample 588 has a resistance of 1.2 ohms.",
      "entity": {
        "entity_type": "sample",
        "id": "588"
      }
    },
    {
      "type": "list",
      "items": ["Item 1", "Item 2"]
    }
  ]
}

- See main.py for the full schema and more details.
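A client call to POST /ask might look like the following. This is a minimal sketch using only the Python standard library; the helper names `build_ask_request` and `ask` are hypothetical and not part of this project, and the base URL assumes the server is running locally on port 8080 as in the Uvicorn command above.

```python
import json
import urllib.request


def build_ask_request(base_url: str, question: str) -> urllib.request.Request:
    """Build a POST request for the /ask endpoint with a JSON body."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/ask",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, question: str, timeout: float = 30.0) -> dict:
    """Send the question and return the decoded JSON response."""
    request = build_ask_request(base_url, question)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server running locally, `ask("http://localhost:8080", "your question here")` should return a dictionary with a `messages` key in the shape shown above. For a deployed Lambda, pass the stage URL printed by `sam deploy` (without the trailing /ask) as `base_url`.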

Environment Variables

- OPENAI_API_KEY: Your OpenAI API key

- SUPABASE_DB_NAME, SUPABASE_DB_USER, SUPABASE_DB_PASSWORD, SUPABASE_DB_HOST, SUPABASE_DB_PORT: Your Postgres database credentials
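One way to read and validate these variables at startup is sketched below. How main.py actually loads them is not shown here, so treat this as an assumption: the function name `build_db_settings` is hypothetical, and the returned keys follow the standard libpq connection keywords (dbname, user, password, host, port) that common Postgres drivers accept.

```python
import os
from typing import Mapping, Optional

# Variable names are taken from the list above.
DB_VARS = (
    "SUPABASE_DB_NAME",
    "SUPABASE_DB_USER",
    "SUPABASE_DB_PASSWORD",
    "SUPABASE_DB_HOST",
    "SUPABASE_DB_PORT",
)
REQUIRED_VARS = ("OPENAI_API_KEY",) + DB_VARS


def build_db_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Return Postgres connection settings, failing fast if anything is missing."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "dbname": env["SUPABASE_DB_NAME"],
        "user": env["SUPABASE_DB_USER"],
        "password": env["SUPABASE_DB_PASSWORD"],
        "host": env["SUPABASE_DB_HOST"],
        "port": int(env["SUPABASE_DB_PORT"]),  # ports arrive as strings
    }
```

Failing at startup with a list of the missing names tends to be easier to debug in CloudWatch than a connection error surfacing on the first request.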

Development Notes

- All logs are sent to stdout (and CloudWatch on Lambda)

- CORS is enabled for all origins by default

- The backend expects the frontend to handle the structured response format
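To illustrate what handling the structured response could involve on the consuming side, here is a small sketch that turns the example response from the API Usage section into plain text lines. Only the "text" and "list" message types shown in that example are handled; the full schema lives in main.py and may include other types, and the function name `render_messages` is hypothetical.

```python
from typing import Any, Dict, List

# Copied from the example response in the API Usage section.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "messages": [
        {
            "type": "text",
            "content": "Sample 588 has a resistance of 1.2 ohms.",
            "entity": {"entity_type": "sample", "id": "588"},
        },
        {"type": "list", "items": ["Item 1", "Item 2"]},
    ]
}


def render_messages(response: Dict[str, Any]) -> List[str]:
    """Convert a structured /ask response into displayable text lines."""
    lines: List[str] = []
    for message in response.get("messages", []):
        kind = message.get("type")
        if kind == "text":
            line = message.get("content", "")
            entity = message.get("entity")
            if entity:
                # Append the linked entity so a UI could turn it into a link.
                line += f" [{entity.get('entity_type')} {entity.get('id')}]"
            lines.append(line)
        elif kind == "list":
            lines.extend(f"- {item}" for item in message.get("items", []))
        else:
            # Unknown types are surfaced rather than silently dropped.
            lines.append(f"(unsupported message type: {kind})")
    return lines
```

A real frontend would map each message type to a UI component instead of a string, but the dispatch on `type` would likely look similar.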

License

MIT (or your license here)

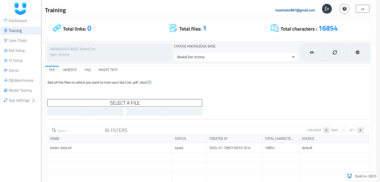

Chatbot SQL Query Backend

Project Details

- rick-noya/mcp-chatbot

- Last Updated: 4/25/2025
