- Updated: March 17, 2024

- 6 min read

What is Retrieval-Augmented Generation (RAG)? An AI Game-Changer

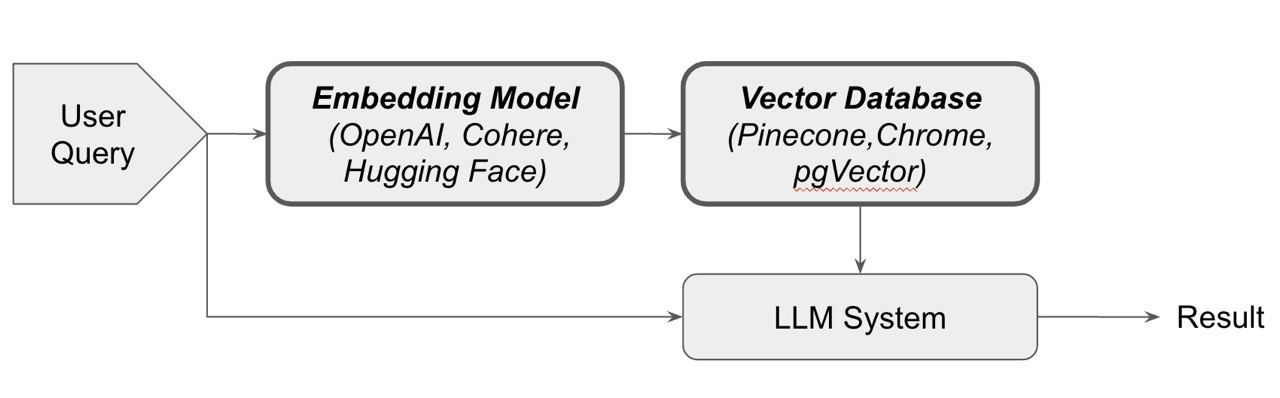

In the ever-evolving world of AI, retrieval-augmented generation (RAG) has emerged as a framework poised to transform how we understand and interact with large language models (LLMs), and UBOS.tech is at the forefront of its implementation. At the heart of RAG is a simple yet powerful concept: grounding AI responses in verifiable facts to make them more reliable and trustworthy.

Before we delve into the intricacies of RAG, it is crucial to understand the limitations of LLMs. Although they can produce intelligent and seemingly 'knowledgeable' responses, LLMs are often inconsistent: their answers range from highly accurate to facts regurgitated at random from their training data. This is largely because LLMs model how words relate statistically, not what those words actually mean.

"Large language models know how words relate statistically, but not what they mean."
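To make the quoted point concrete, here is a deliberately tiny analogy: a bigram counter that predicts the next word purely from how often word pairs appear in its training text. Real LLMs are neural networks over tokens, not bigram tables, so this is only an illustration; the corpus and the function names `train_bigrams` and `most_likely_next` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def most_likely_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus: the incorrect statement simply appears more often than the correct one.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]
model = train_bigrams(corpus)
print(most_likely_next(model, "is"))
```

Here `most_likely_next(model, "is")` returns `"sydney"` because that continuation is more frequent in the toy corpus, even though Canberra is the capital of Australia. Nothing in the model represents which statement is true, only which is more common.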

This is precisely where retrieval-augmented generation comes into play, acting as a bridge between external knowledge and the LLM's internal representation of information. By retrieving relevant passages from an external knowledge source at query time, RAG gives the model access to current, reliable facts instead of relying solely on what it absorbed during training. This not only improves the quality of LLM-generated responses but also lets users see which sources the model drew on, which builds trust in the generated output.
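The retrieve-then-generate idea can be sketched in a few lines. This is a minimal illustration, not the UBOS implementation: it scores a few hypothetical documents against a query with bag-of-words cosine similarity (production systems typically use vector embeddings) and assembles a prompt containing the retrieved passages tagged with source ids, which is what lets users trace an answer back to its sources. The actual LLM call is left out because it depends on the model provider.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; in practice this would be a document or vector store.
DOCUMENTS = {
    "doc-1": "RAG retrieves relevant passages from an external knowledge source before generation.",
    "doc-2": "Large language models predict tokens based on statistical patterns in training data.",
    "doc-3": "UBOS is a low-code platform for building generative AI applications.",
}

def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the ids and scores of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in DOCUMENTS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

def build_prompt(query: str, k: int = 2) -> str:
    """Augment the user's question with retrieved, citable sources."""
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id, _ in retrieve(query, k))
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

print(build_prompt("How does RAG use an external knowledge source?"))
```

The prompt produced by `build_prompt` would then be sent to an LLM; because the sources are labeled, the model can cite them and the user can check them.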

The Power of Large Language Models through Visual Programming

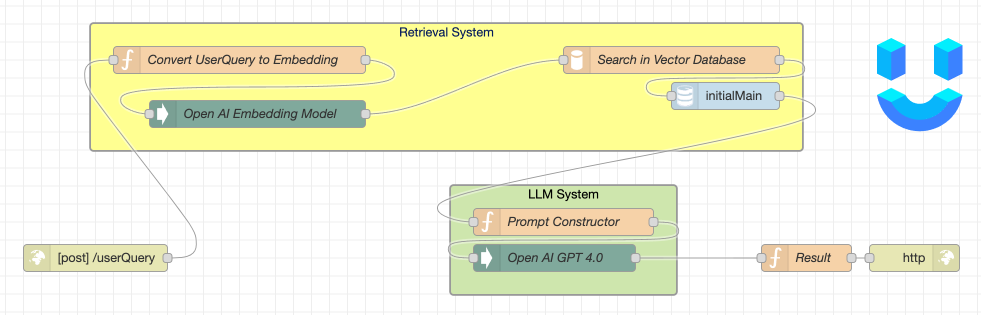

In the realm of visual programming, UBOS.tech has made significant strides, particularly in leveraging large language models. Our visual programming approach aims to make creating and deploying custom Generative AI solutions more accessible and efficient.

By offering an innovative low-code/no-code platform, we aim to simplify application development, allowing businesses and developers to build and deploy custom applications without extensive coding knowledge or specialized AI expertise. Combined with our work on RAG, this offers an unparalleled opportunity to harness AI in a practical, user-friendly way.

RAG and the Future of Low-Code Development Platforms

With the advent of RAG, the possibilities for low-code development platforms have significantly expanded. RAG's unique ability to retrieve and utilize factual information from external sources can dramatically increase the accuracy and reliability of applications developed on low-code platforms.

At UBOS.tech, our low-code platform is designed with a robust, user-friendly interface that enables even non-technical users to create and deploy their own applications. By integrating RAG into this system, we are paving the way for more reliable, fact-grounded applications that are not only easy to build but also effective.

Workflow Automation and Integrations

Another promising application of RAG is workflow automation and integrations. Because it retrieves information from external databases, RAG can add a new layer of reliability to automated workflows and integrations, such as those connecting Telegram and ChatGPT.

For instance, an AI chatbot developed on UBOS.tech, powered by RAG, could provide more accurate and reliable responses to user queries. This could drastically improve user experience and trust in AI-powered solutions.
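One way a RAG-powered chatbot can be more reliable is by declining to answer when retrieval finds nothing relevant, instead of letting the model guess. The sketch below assumes a hypothetical FAQ knowledge base, uses simple word-overlap (Jaccard) scoring as a stand-in for embedding similarity, and uses an arbitrary threshold of 0.1; it does not describe how any specific UBOS template or the Telegram and ChatGPT integrations work.

```python
import re

# Hypothetical FAQ entries the bot is grounded in.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days of approval.",
    "You can reset your password from the account settings page.",
]

FALLBACK = "I couldn't find that in our knowledge base, so I'm handing you over to a human agent."

def words(text: str) -> set[str]:
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def grounded_reply(message: str, threshold: float = 0.1) -> str:
    """Answer from the best-matching entry, or fall back if nothing is relevant enough."""
    query = words(message)
    best_score, best_entry = max((jaccard(query, words(entry)), entry) for entry in KNOWLEDGE_BASE)
    if best_score < threshold:
        return FALLBACK
    # A real bot would insert best_entry into the LLM prompt; here it is returned directly.
    return f"According to our records: {best_entry}"

print(grounded_reply("How do I reset my password?"))
print(grounded_reply("What is the weather on Mars?"))
```

The first question matches the password entry and is answered from it; the second shares almost no words with the knowledge base, so the bot hands off instead of inventing an answer.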

Implementing the RAG Architecture in Our AI Bot Solution: A Step Towards Innovation

At UBOS.tech, innovation is at the core of everything we do. In our continued efforts to democratize AI, we are proud to announce that we have successfully implemented the RAG architecture in our AI Bot solution. The most exciting part? It's available for free on our platform!

With our AI Bot solution, you can clone it and start developing your own solutions based on the RAG architecture and LLMs. This significantly reduces development time and lets developers leverage RAG and LLMs without extensive coding knowledge or specialized AI expertise.
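Building a RAG solution usually starts with splitting source documents into chunks that can be indexed and retrieved individually. The sketch below shows one common strategy, fixed-size word windows with overlap so that text near a boundary appears in both neighboring chunks. It is an assumption for illustration, not a description of what the UBOS AI Bot does internally; `chunk_text` is a hypothetical helper and its default sizes are arbitrary.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks

print(chunk_text("0 1 2 3 4 5 6 7 8 9", chunk_size=4, overlap=1))
```

For example, `chunk_text("0 1 2 3 4 5 6 7 8 9", chunk_size=4, overlap=1)` returns `["0 1 2 3", "3 4 5 6", "6 7 8 9"]`. Larger overlaps preserve more context across boundaries at the cost of storing more duplicated text.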

The implementation of RAG architecture in our AI Bot solution is an integral part of our commitment to making AI more accessible and reliable. Whether you're a seasoned developer or a curious beginner, the UBOS platform offers a seamless and efficient way to harness the power of AI and RAG.

So why wait? Join us at UBOS.tech today and start your journey into the exciting world of AI development!

In Conclusion

The potential of Retrieval-Augmented Generation is immense, offering a promising future for AI development. From enhancing the quality of responses generated by Large Language Models to increasing the reliability of low-code applications and automated workflows, RAG is set to revolutionize the AI landscape.

At UBOS.tech, we are excited to be at the forefront of this movement, championing the integration of RAG into our low-code/no-code platform and empowering businesses and developers to create more reliable, fact-based AI solutions.

Andrii Bidochko

CEO/CTO at UBOS

Welcome! I'm the CEO/CTO of UBOS.tech, a low-code/no-code application development platform designed to simplify the process of creating custom Generative AI solutions. With an extensive technical background in AI and software development, I've steered our team towards a single goal: to empower businesses to become autonomous, AI-first organizations.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.