- Updated: March 17, 2024


Unleashing the Power of Large Language Models through Visual Programming: Comparing LangChain, LangFlow, and Node-RED

Large Language Models (LLMs) offer transformative potential in AI, extending what software can do across many sectors. This article walks through how LLM prompts can be chained together using visual programming, and compares three tools for doing so.

Leveraging LLMs: An Overview

Harnessing LLMs has become a major focus of AI development. Trained on vast amounts of text, these models can understand, generate, and refine human-written language. Their abilities go well beyond simple text generation: they can produce reasoned responses, answer complex queries, and sustain more engaging interactions with users.

Unfolding the Magic: Chaining Prompts in Large Language Models

To get the most out of an LLM, chaining prompts is essential. Chaining links several prompts so that the model's response to one becomes input or context for the next. The result is a coherent, context-aware dialogue that feels closer to a natural conversation than a sequence of isolated questions.

```mermaid
graph TB
    A[Large Language Model] --> B[First Prompt]
    B --> C[Generated Response]
    C --> D[Second Prompt]
    D --> E[Next Generated Response]
    E --> F[Third Prompt]
    F --> G[Further Generated Response]
```
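The chain in the diagram can be sketched in a few lines of Python. This is a minimal illustration, not code from any particular framework: `fake_llm` is a hypothetical stand-in for a real model call (for example, a request to a hosted LLM), and the three prompt templates are invented for the example. The point to notice is that each template receives the previous response, so later prompts carry the context built up by earlier ones.

```python
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call, so the example runs offline."""
    return f"<answer to: {prompt}>"


def run_chain(llm: Callable[[str], str], templates: List[str], user_input: str) -> List[str]:
    """Run prompts in sequence, feeding each response into the next template."""
    responses: List[str] = []
    previous = user_input
    for template in templates:
        prompt = template.format(previous=previous)  # inject the prior output as context
        previous = llm(prompt)
        responses.append(previous)
    return responses


templates = [
    "Summarize this request: {previous}",
    "List three steps to address: {previous}",
    "Write a short reply based on: {previous}",
]

for step, response in enumerate(run_chain(fake_llm, templates, "plan a team offsite"), start=1):
    print(step, response)
```

Swapping `fake_llm` for a real client is the only change needed to run the same chain against an actual model.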

Harnessing Visual Programming: Bringing the Power to You

Visual programming makes prompt chains much easier to build. Instead of writing code, developers arrange and connect program elements graphically, so even complex chaining scenarios can be assembled and understood more intuitively.

The Art of Chaining Prompts in LLMs

Chaining is where LLMs show their real strength. Because each prompt builds on the responses before it, the dialogue stays coherent and context-aware, and the interaction resembles a natural conversation rather than a series of one-off queries.

Comparative Analysis: LangChain, LangFlow UI, and Node-RED in UBOS.tech

Large Language Models (LLMs) are powerful assets in AI, and prompt-chaining strategies make them more effective still. Several tools support this process, each with its own strengths and drawbacks. This section compares the LangChain Python framework, the LangFlow UI, and the Node-RED approach used by UBOS.tech.

LangChain: The Python Approach

LangChain is a robust Python-based framework primarily designed for chaining prompts in LLMs. Its main strengths lie in its flexibility and versatility, thanks to Python’s extensive library support and wide user base.

Nevertheless, LangChain’s reliance on Python means that users must possess a solid grounding in the language. This requirement can be a barrier for non-technical users or those unfamiliar with Python.
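To show what code-first chaining looks like: recent LangChain versions compose steps with the `|` operator (the LangChain Expression Language), as in `prompt | model | parser`. The dependency-free toy below only mimics that pipe style; it is not LangChain's implementation, and the `Step` class and the prompt, model, and parser stand-ins are hypothetical.

```python
from typing import Any, Callable


class Step:
    """Hypothetical wrapper that lets functions be composed left to right with `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # (a | b).invoke(x) == b(a(x))
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Stand-ins for a prompt template, a model call, and an output parser.
prompt = Step(lambda topic: f"Write a one-line slogan about {topic}.")
model = Step(lambda text: f"  MODEL OUTPUT for [{text}]  ")  # hypothetical fake model
parser = Step(lambda raw: raw.strip())  # trim whitespace from the raw output

chain = prompt | model | parser
print(chain.invoke("visual programming"))
```

Every step is ordinary Python, which is where the framework's flexibility comes from, and also why it assumes users are comfortable reading and writing code.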

LangFlow UI: Bridging the Gap

LangFlow UI attempts to bridge the gap between technical complexity and user-friendly interaction. This web-based interface is designed to make the prompt chaining process more accessible to a broader user base.

While LangFlow UI simplifies the interaction with LLMs, it can be less flexible for more complex chaining scenarios. It’s more suitable for simpler applications, and advanced users might find it limiting.

Node-RED in UBOS.tech: The Best of Both Worlds

Node-RED in UBOS.tech offers a unique blend of simplicity and power. This visual programming tool allows users to create and deploy complex chaining scenarios in a more intuitive, graphical manner, eliminating the need for extensive coding knowledge.

Paired with UBOS.tech, a platform designed for low-code AI solution development, Node-RED becomes a powerful tool for users of all expertise levels. It promotes a user-friendly approach without compromising on the flexibility and control offered by more traditional, code-intensive methods.
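Behind the canvas, Node-RED stores a flow as JSON: each node has an `id` and a `type`, and its `wires` field lists, per output port, the ids of the nodes it sends messages to. Messages travel between nodes as objects whose main content is `msg.payload`. The Python sketch below imitates that model to show how a picture of connected boxes becomes an executable prompt chain. It is a simplified, hypothetical runner, not Node-RED itself (which runs on Node.js); the `prompt` and `llm` node types and all handlers are invented for illustration.

```python
from collections import deque
from typing import Callable, Dict, List, Optional

# Simplified, Node-RED-like flow: each node lists downstream node ids under
# "wires", one inner list per output port (here every node has one port).
FLOW: List[dict] = [
    {"id": "n1", "type": "prompt", "template": "Translate to French: {payload}", "wires": [["n2"]]},
    {"id": "n2", "type": "llm", "wires": [["n3"]]},
    {"id": "n3", "type": "debug", "wires": []},
]

captured: List[str] = []  # what the "debug" node receives


def fake_llm(prompt: str) -> str:
    """Hypothetical model call; swap in a real LLM client here."""
    return f"[LLM reply to '{prompt}']"


def prompt_node(node: dict, msg: dict) -> Optional[dict]:
    # Fill the node's template with the incoming payload.
    return {**msg, "payload": node["template"].format(payload=msg["payload"])}


def llm_node(node: dict, msg: dict) -> Optional[dict]:
    return {**msg, "payload": fake_llm(msg["payload"])}


def debug_node(node: dict, msg: dict) -> Optional[dict]:
    captured.append(msg["payload"])
    return None  # end of the line: nothing is forwarded


HANDLERS: Dict[str, Callable[[dict, dict], Optional[dict]]] = {
    "prompt": prompt_node,
    "llm": llm_node,
    "debug": debug_node,
}


def run_flow(flow: List[dict], start_id: str, msg: dict) -> None:
    """Deliver msg to start_id, then follow the wires breadth-first."""
    nodes = {node["id"]: node for node in flow}
    queue = deque([(start_id, msg)])
    while queue:
        node_id, current = queue.popleft()
        node = nodes[node_id]
        out = HANDLERS[node["type"]](node, current)
        if out is None:
            continue
        for port in node["wires"]:  # each output port
            for target in port:  # each wire leaving that port
                queue.append((target, out))


run_flow(FLOW, "n1", {"payload": "Hello, world"})
print(captured)
```

Rewiring the chain means editing the `wires` lists, which is exactly what dragging connections on a visual canvas does.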

Beyond the Horizon: The Future of LLMs, Node-RED, and UBOS.tech

The amalgamation of LLMs, Node-RED, and UBOS.tech offers a glimpse into the future of AI. As we delve deeper into the potential of these technologies, we inch closer to a revolution in human-computer interaction.

In conclusion, the combination of LLMs, visual programming via Node-RED, and the UBOS.tech platform offers a transformative solution for AI-powered applications. By capitalizing on these technologies, we unlock a future where AI is not merely a tool but a game-changer in our daily lives.

Examples

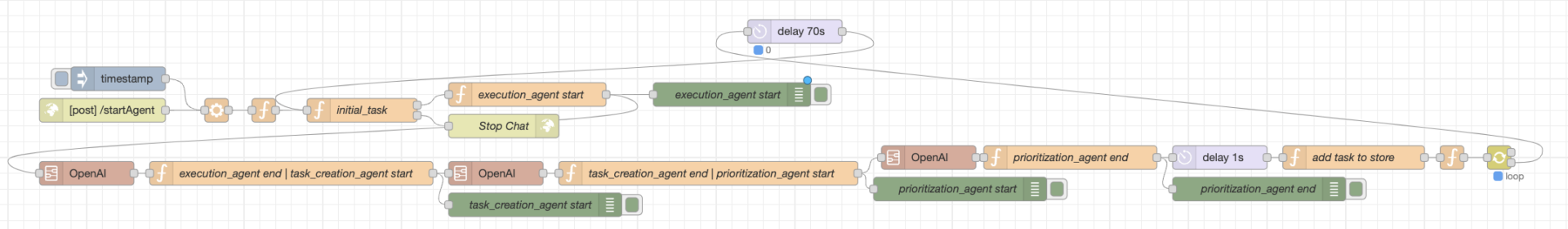

Example 1: Creating Autonomous Agents – BabyAGI & AutoGPT

Autonomous agents are a significant milestone in AI development. Acting independently, they can carry out intricate tasks, make informed decisions, and deliver sophisticated solutions. BabyAGI and AutoGPT are prime examples.

Building such an agent involves chaining multiple prompts and running them in a loop with an LLM. The process starts with an initial prompt; the LLM's response is evaluated, and the result shapes the next prompt, repeating until the work is done. Because every iteration carries forward the context of the previous ones, the agent's work stays continuous, coherent, and context-aware.

The autonomy and adaptability of BabyAGI and AutoGPT show the potential of this approach. With Node-RED in UBOS.tech, users can build these chaining scenarios visually and create autonomous agents tailored to specific use cases.
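The loop described above can be sketched as follows. This is a simplified, hypothetical agent loop in the spirit of BabyAGI and AutoGPT, not either project's actual code: `fake_llm` stands in for a real model, and the convention that the model announces new tasks on lines starting with `TASK:` is an assumption made for the example. The `max_steps` cap keeps the loop from running forever, a practical safeguard for any autonomous agent.

```python
from collections import deque
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Hypothetical model with canned replies keyed on the current task."""
    if "Task: research the topic" in prompt:
        return "Collected notes.\nTASK: draft an outline"
    if "Task: draft an outline" in prompt:
        return "Outline ready.\nTASK: write the summary"
    return "Summary written."


def run_agent(objective: str, first_task: str, llm: Callable[[str], str], max_steps: int = 10) -> List[str]:
    tasks = deque([first_task])
    log: List[str] = []
    context = ""  # results so far, fed back into every prompt
    steps = 0
    while tasks and steps < max_steps:
        task = tasks.popleft()
        reply = llm(f"Objective: {objective}\nContext: {context}\nTask: {task}")
        # Lines starting with "TASK:" become new tasks (an assumed convention).
        result_lines = []
        for line in reply.splitlines():
            if line.startswith("TASK:"):
                tasks.append(line[len("TASK:"):].strip())
            else:
                result_lines.append(line)
        result = " ".join(result_lines)
        log.append(f"{task} -> {result}")
        context += result + " "
        steps += 1
    return log


for entry in run_agent("summarize visual programming tools", "research the topic", fake_llm):
    print(entry)
```

In a visual editor, the same loop corresponds to a flow whose last node wires back to the prompt node, with a counter or condition node breaking the cycle.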

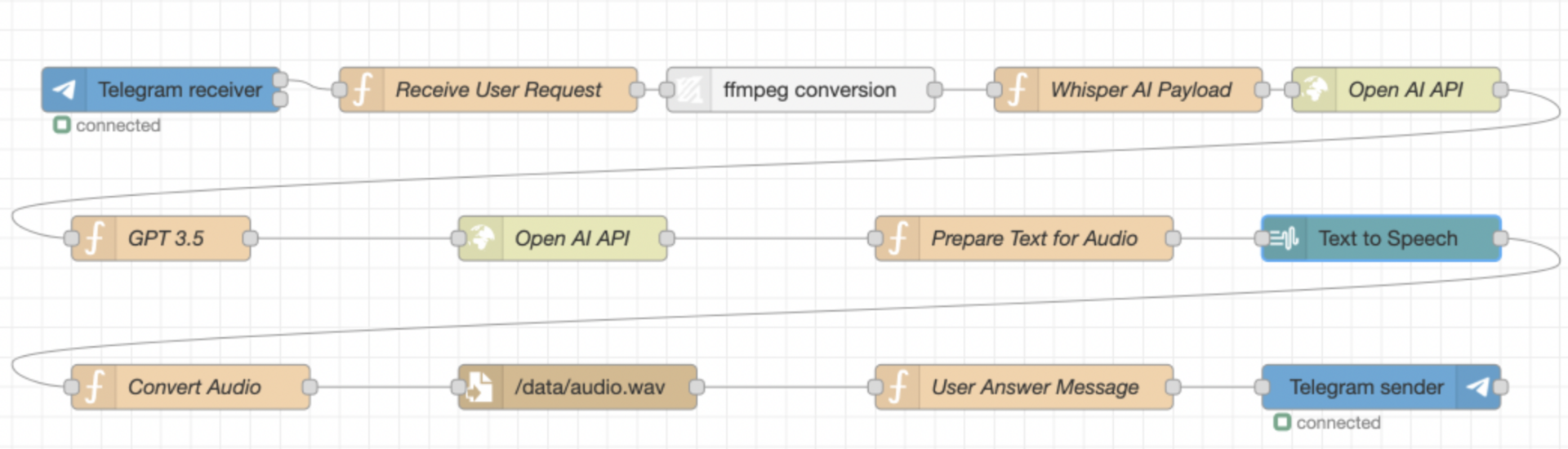

Example 2: Creating a Voice-Based Two-Way Chat with ChatGPT via Telegram using ElevenLabs API

Another application of LLMs is a voice-based, two-way chat over Telegram powered by ChatGPT, OpenAI's popular LLM-based chatbot. What sets this setup apart is the use of the ElevenLabs API, a text-to-speech service.

In this setup, users speak to ChatGPT, and its text responses are converted into speech, simulating a natural, human-like conversation.

Here's how the process unfolds:

1. The user's voice message is transcribed into text with OpenAI's Whisper speech-to-text model.
2. ChatGPT processes this text and generates a textual response.
3. The ElevenLabs API converts the response into speech.
4. The voice response is delivered back to the user via Telegram, completing one full cycle of voice-based interaction.

The whole operation can be built with Node-RED on UBOS.tech. Users create flows that handle each step of the interaction, from transcribing speech with Whisper and invoking ChatGPT to converting text to speech with the ElevenLabs API.
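The voice cycle maps naturally onto a pipeline of functions, one per node in a flow. In this sketch every external service is replaced by a hypothetical stub (`transcribe`, `chat`, `synthesize`), and an in-memory `outbox` stands in for Telegram delivery; a real build would call the Whisper, ChatGPT, ElevenLabs, and Telegram Bot APIs, whose actual endpoints and parameters are not shown here. The `role`/`content` message dicts are modeled on the chat-message format OpenAI's chat models use. Keeping the history means each chat call sees earlier turns, which is what makes the exchange a conversation rather than one-off questions.

```python
from dataclasses import dataclass, field
from typing import List


# --- Hypothetical stubs for the external services ---
def transcribe(audio: bytes) -> str:
    """Stand-in for speech-to-text (e.g. Whisper); here the 'audio' is UTF-8 text."""
    return audio.decode("utf-8")


def chat(history: List[dict]) -> str:
    """Stand-in for a chat-model call; replies to the latest user message."""
    return f"You said: {history[-1]['content']}"


def synthesize(text: str) -> bytes:
    """Stand-in for text-to-speech (e.g. ElevenLabs); returns fake audio bytes."""
    return text.encode("utf-8")


@dataclass
class Conversation:
    history: List[dict] = field(default_factory=list)
    outbox: List[bytes] = field(default_factory=list)  # stands in for Telegram delivery

    def handle_voice_message(self, audio: bytes) -> bytes:
        text = transcribe(audio)  # 1. speech -> text
        self.history.append({"role": "user", "content": text})
        reply = chat(self.history)  # 2. text -> reply
        self.history.append({"role": "assistant", "content": reply})
        voice = synthesize(reply)  # 3. reply -> speech
        self.outbox.append(voice)  # 4. send back to the user
        return voice


conv = Conversation()
print(conv.handle_voice_message(b"Hello there"))
```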

Andrii Bidochko

CEO/CTO at UBOS

Welcome! I'm the CEO/CTO of UBOS.tech, a low-code/no-code application development platform designed to simplify the process of creating custom Generative AI solutions. With an extensive technical background in AI and software development, I've steered our team towards a single goal - to empower businesses to become autonomous, AI-first organizations.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.