Overview of UBOS Asset Marketplace for MCP Servers

In the rapidly evolving world of artificial intelligence, the need for seamless integration and efficient management of AI models and tools is paramount. The UBOS Asset Marketplace for MCP Servers offers a cutting-edge solution for businesses looking to streamline their AI operations. This platform is designed to support the integration of Model Context Protocol (MCP) servers, which act as a bridge, enabling AI models to access and interact with external data sources and tools.

What is an MCP Server?

MCP, or Model Context Protocol, is an open protocol that standardizes how applications provide context to large language models (LLMs). An MCP server implements this protocol, exposing data sources and tools that AI models can query and call.

Key Features of MCP Servers

- Dynamic Tool Exposure: The LowLevel mode dynamically creates tools for each chatflow or assistant, ensuring a flexible and adaptive environment.

- Simpler Configuration: FastMCP mode offers a straightforward setup with static tools for listing chatflows and creating predictions.

- Flexible Filtering: Both modes support filtering chatflows via whitelists and blacklists by IDs or names, enhancing customization.

- MCP Integration: Seamlessly integrates into MCP workflows, providing a cohesive and efficient operational framework.
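The whitelist and blacklist filtering described above can be sketched as follows. This is a minimal illustration, not the package's actual implementation: the `Chatflow` record, the `filter_chatflows` function, exact-match comparison on names, and the rule that a blacklist overrides a whitelist are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Chatflow:
    """Hypothetical minimal record for a chatflow (not the package's type)."""
    id: str
    name: str


def filter_chatflows(
    chatflows: Iterable[Chatflow],
    whitelist: Iterable[str] = (),
    blacklist: Iterable[str] = (),
) -> List[Chatflow]:
    """Keep chatflows whose ID or name passes the whitelist and blacklist.

    Assumed semantics: an empty whitelist allows everything, and a
    blacklist match always removes the chatflow.
    """
    allowed = set(whitelist)
    blocked = set(blacklist)
    kept = []
    for flow in chatflows:
        keys = {flow.id, flow.name}
        if allowed and not (keys & allowed):
            continue  # a whitelist is set and this chatflow is not on it
        if keys & blocked:
            continue  # explicitly blacklisted by ID or name
        kept.append(flow)
    return kept


if __name__ == "__main__":
    flows = [
        Chatflow("a1", "Support Bot"),
        Chatflow("b2", "Sales Bot"),
        Chatflow("c3", "Internal Test"),
    ]
    for flow in filter_chatflows(flows, blacklist=["Internal Test"]):
        print(flow.id, flow.name)
```

Matching on either the ID or the name in one pass keeps the example short; a real deployment might prefer separate lists for IDs and names so that a chatflow name can never collide with an ID.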

Use Cases

Enterprise AI Integration: Businesses can leverage MCP servers to integrate AI models with their existing data systems, enabling more intelligent and context-aware applications.

Custom AI Development: Developers can build and deploy custom AI agents using the UBOS platform, utilizing MCP servers to enhance their capabilities.

Real-time Data Interaction: MCP servers allow AI models to interact with real-time data sources, providing up-to-date insights and analytics.

Installation and Configuration

Installing the mcp-flowise package is straightforward. It supports two operation modes: LowLevel Mode, which dynamically registers tools, and FastMCP Mode, suitable for simpler configurations. Both modes offer flexible filtering options and seamless integration into MCP workflows.

Installation via Smithery

To install mcp-flowise for Claude Desktop automatically, use the Smithery CLI:

npx -y @smithery/cli install @matthewhand/mcp-flowise --client claude

Prerequisites

- Python 3.12 or higher

- uvx package manager

Running on Windows

For Windows users, if issues arise with --from git+https, clone the repository locally and configure the mcpServers entry with the full path to uvx.exe and the full path to the cloned repository.
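As a rough sketch of what such a local configuration might look like, the snippet below builds an mcpServers entry and prints it as JSON. The entry name `flowise`, the helper `build_mcp_servers_config`, and both example paths are placeholders chosen for illustration; substitute the real locations of uvx.exe and the cloned repository on your machine, and check the project's own documentation for the exact keys it expects.

```python
import json


def build_mcp_servers_config(uvx_path: str, repo_path: str,
                             server_name: str = "flowise") -> dict:
    """Build an mcpServers entry that runs mcp-flowise from a local clone.

    Assumes the client accepts a "command" plus "args" pair, and that
    `uvx --from <path> mcp-flowise` starts the server from the local clone.
    """
    return {
        "mcpServers": {
            server_name: {
                "command": uvx_path,
                "args": ["--from", repo_path, "mcp-flowise"],
            }
        }
    }


if __name__ == "__main__":
    # Placeholder paths, not real locations.
    config = build_mcp_servers_config(
        uvx_path=r"C:\path\to\uvx.exe",
        repo_path=r"C:\path\to\mcp-flowise",
    )
    # json.dumps escapes the backslashes, as a JSON config file requires.
    print(json.dumps(config, indent=2))
```

Generating the JSON rather than typing it by hand avoids the most common Windows mistake in these files, which is forgetting to double the backslashes in paths.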

Security and Best Practices

- Protect Your API Key: Ensure the FLOWISE_API_KEY is secure and not exposed in logs or repositories.

- Environment Configuration: Utilize .env files or environment variables for sensitive configurations.
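One way to follow both practices is to read the key from the environment at startup and mask it before it reaches any log line. The sketch below assumes only the FLOWISE_API_KEY variable named above; the helper names `load_flowise_api_key` and `mask_secret` are hypothetical and not part of mcp-flowise.

```python
import os
from typing import Mapping, Optional


def load_flowise_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Read FLOWISE_API_KEY from the environment, failing fast if it is missing."""
    env = os.environ if env is None else env
    key = env.get("FLOWISE_API_KEY", "").strip()
    if not key:
        raise RuntimeError("FLOWISE_API_KEY is not set")
    return key


def mask_secret(secret: str, visible: int = 4) -> str:
    """Return the secret with all but the last `visible` characters starred out."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    try:
        api_key = load_flowise_api_key()
        # Log only the masked form, never the raw key.
        print("Using Flowise API key:", mask_secret(api_key))
    except RuntimeError as err:
        print("Configuration error:", err)
```

If the key lives in a .env file, load that file into the process environment before calling the loader, and keep the file out of version control.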

UBOS Platform

UBOS is a full-stack AI Agent Development Platform focused on bringing AI Agents to every business department. Our platform helps you orchestrate AI Agents, connect them with your enterprise data, and build custom AI Agents with your LLM model and Multi-Agent Systems. By integrating MCP servers, UBOS enhances the ability to deploy AI solutions that are both intelligent and contextually aware.

The UBOS Asset Marketplace for MCP Servers is not just a tool; it’s a gateway to a more integrated and efficient AI-driven future. By providing a standardized protocol for AI models to interact with external data, it empowers businesses to harness the full potential of AI technologies.

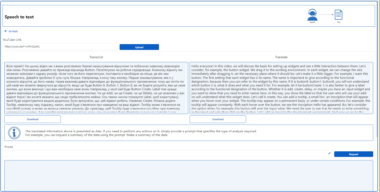

Flowise API

Project Details

- matthewhand/mcp-flowise

- MIT License

- Last Updated: 4/8/2025

Recommended MCP Servers

Connect Rhino3D to Claude AI via the Model Context Protocol

A Model Context Protocol (MCP) server that helps read GitHub repository structure and important files.

A Model Context Protocol (MCP) server for reading Excel files with automatic chunking and pagination support. Built with...

Provides summarised output from various actions that could otherwise eat up tokens and cause crashes for AI agents

cloudflare workers MCP server

An mcp server to inject raw chain of thought tokens from a reasoning model.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.
