DeepView MCP

DeepView MCP is a Model Context Protocol server that enables IDEs like Cursor and Windsurf to analyze large codebases using Gemini’s extensive context window.

Features

- Load an entire codebase from a single text file (e.g., created with tools like repomix)

- Query the codebase using Gemini’s large context window

- Connect to IDEs that support MCP, such as Cursor and Windsurf

- Configurable Gemini model selection via command-line arguments

Prerequisites

- Python 3.13+

- Gemini API key from Google AI Studio

Installation

Using pip

pip install deepview-mcp

Usage

Starting the Server

Note: You don't need to start the server manually. Your IDE launches it from its MCP configuration (see below), and the parameters shown here are set in that configuration.

# Basic usage with default settings

deepview-mcp [path/to/codebase.txt]

# Specify a different Gemini model

deepview-mcp [path/to/codebase.txt] --model gemini-2.0-pro

# Change log level

deepview-mcp [path/to/codebase.txt] --log-level DEBUG

The codebase file parameter is optional. If not provided, you’ll need to specify it when making queries.

Command-line Options

- --model MODEL: Specify the Gemini model to use (default: gemini-2.0-flash-lite)

- --log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL}: Set the logging level (default: INFO)
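To make the option semantics concrete, here is a minimal sketch of an argument parser that mirrors the documented interface. It is not the project's actual implementation; the function name `build_parser` and the argument name `codebase_file` are assumptions chosen for illustration, and only the flags and defaults come from the list above.

```python
import argparse

LOG_LEVELS = ["DEBUG", "INFO", "WARNING", "ERROR", "CRITICAL"]


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the documented deepview-mcp options."""
    parser = argparse.ArgumentParser(prog="deepview-mcp")
    # Optional positional argument: the codebase file may be omitted.
    parser.add_argument("codebase_file", nargs="?", default=None)
    # Documented default model.
    parser.add_argument("--model", default="gemini-2.0-flash-lite")
    # Documented log levels and default.
    parser.add_argument("--log-level", choices=LOG_LEVELS, default="INFO")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch is self-contained.
args = build_parser().parse_args(["codebase.txt", "--log-level", "DEBUG"])
print(args.codebase_file, args.model, args.log_level)
```

Because the positional argument uses `nargs="?"`, calling the parser with no arguments leaves `codebase_file` as `None`, which matches the documented behavior of supplying the file at query time instead.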

Using with an IDE (Cursor/Windsurf/…)

- Open IDE settings

- Navigate to the MCP configuration

- Add a new MCP server with the following configuration:

{
  "mcpServers": {
    "deepview": {
      "command": "/path/to/deepview-mcp",
      "args": [],
      "env": {
        "GEMINI_API_KEY": "your_gemini_api_key"
      }
    }
  }
}

Setting a codebase file is optional. If you always work with the same codebase, you can set a default codebase file with the following configuration:

{
  "mcpServers": {
    "deepview": {
      "command": "/path/to/deepview-mcp",
      "args": ["/path/to/codebase.txt"],
      "env": {
        "GEMINI_API_KEY": "your_gemini_api_key"
      }
    }
  }
}

To specify which Gemini model to use, pass the --model option in args:

{
  "mcpServers": {
    "deepview": {
      "command": "/path/to/deepview-mcp",
      "args": ["--model", "gemini-2.5-pro-exp-03-25"],
      "env": {
        "GEMINI_API_KEY": "your_gemini_api_key"
      }
    }
  }
}

- Reload MCP servers configuration
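The configuration variants above differ only in the args list, so they can be generated from one small helper. The following is a sketch, not part of DeepView MCP: the function name `build_mcp_config` and its parameters are hypothetical, and the paths and API key are the same placeholders used in the examples above.

```python
import json


def build_mcp_config(command, api_key, codebase_file=None, model=None):
    """Build the mcpServers entry for DeepView (hypothetical helper)."""
    args = []
    if codebase_file:
        # Optional default codebase file, passed as the positional argument.
        args.append(codebase_file)
    if model:
        # Optional model override, as documented for --model.
        args += ["--model", model]
    return {
        "mcpServers": {
            "deepview": {
                "command": command,
                "args": args,
                "env": {"GEMINI_API_KEY": api_key},
            }
        }
    }


config = build_mcp_config(
    "/path/to/deepview-mcp",
    "your_gemini_api_key",
    codebase_file="/path/to/codebase.txt",
    model="gemini-2.5-pro-exp-03-25",
)
print(json.dumps(config, indent=2))
```

The printed JSON can be pasted into the IDE's MCP configuration. Where that configuration lives depends on the IDE, so the sketch deliberately does not write to any file.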

Available Tools

The server provides one tool:

deepview: Ask a question about the codebase

- Required parameter: question - The question to ask about the codebase

- Optional parameter: codebase_file - Path to a codebase file to load before querying
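Normally the IDE builds tool calls for you, but it can help to see what a request to this tool might look like on the wire. The sketch below assembles a JSON-RPC `tools/call` message in the shape defined by the Model Context Protocol; the helper name `build_tool_call` and the example question are illustrative assumptions, while the tool name and parameter names come from the description above.

```python
import json


def build_tool_call(question, codebase_file=None, request_id=1):
    """Build an MCP tools/call request for the deepview tool (hypothetical helper)."""
    if not question:
        raise ValueError("question is required")
    arguments = {"question": question}
    if codebase_file is not None:
        # Only include the optional parameter when a file is given.
        arguments["codebase_file"] = codebase_file
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": "deepview", "arguments": arguments},
    }


request = build_tool_call(
    "Where is the logging level configured?",
    codebase_file="/path/to/codebase.txt",
)
print(json.dumps(request, indent=2))
```

If the server was started with a default codebase file, the `codebase_file` argument can be left out and the request carries only `question`.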

Preparing Your Codebase

DeepView MCP requires a single file containing your entire codebase. You can use repomix to prepare your codebase in an AI-friendly format.
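Since the whole file is sent to the model, it can be worth checking roughly how large the packed codebase is before querying. The sketch below uses a crude estimate of about four characters per token; that ratio, the default `context_limit`, and the `reserve` for the question and answer are all assumptions, not values taken from DeepView MCP or the Gemini documentation, so check the limits of the model you actually use.

```python
from pathlib import Path


def estimate_tokens(path, chars_per_token=4.0):
    """Rough token estimate from character count (heuristic, not a real tokenizer)."""
    text = Path(path).read_text(encoding="utf-8", errors="replace")
    return int(len(text) / chars_per_token)


def fits_context(path, context_limit=1_000_000, reserve=50_000):
    """Return True if the estimated size plus a reserve stays within the assumed limit."""
    return estimate_tokens(path) + reserve <= context_limit


# Usage (the file name is a placeholder):
# if not fits_context("repomix-output.xml"):
#     print("Codebase file is probably too large; exclude more files.")
```

If the estimate is close to the limit, narrowing the set of packaged files is usually simpler than switching models.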

Using repomix

- Basic Usage: Run repomix in your project directory to create a default output file:

# Make sure you're using Node.js 18.17.0 or higher

npx repomix

This will generate a repomix-output.xml file containing your codebase.

- Custom Configuration: Create a configuration file to customize which files get packaged and the output format:

npx repomix --init

This creates a repomix.config.json file that you can edit to:

- Include/exclude specific files or directories

- Change the output style (for example xml, markdown, or plain)

- Set the output filename

- Configure other packaging options
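For one-off runs, similar choices can be passed on the command line instead of through a config file. The sketch below only assembles the command; it assumes the `--output`, `--style`, `--include`, and `--ignore` flags of the repomix CLI (confirm with `npx repomix --help` for your version), and the helper name `repomix_command` is hypothetical.

```python
import shlex


def repomix_command(output="repomix-output.xml", style="xml", include=None, ignore=None):
    """Assemble an npx repomix command line (hypothetical helper)."""
    cmd = ["npx", "repomix", "--output", output, "--style", style]
    if include:
        # repomix takes comma-separated glob patterns.
        cmd += ["--include", ",".join(include)]
    if ignore:
        cmd += ["--ignore", ",".join(ignore)]
    return cmd


cmd = repomix_command(
    output="my-codebase.xml",
    include=["**/*.py", "**/*.ts"],
    ignore=["**/test/**"],
)
# Print a shell-safe version of the command for inspection.
print(shlex.join(cmd))
# To run it (requires Node.js), pass the list to subprocess.run(cmd, check=True).
```

Building the command as a list avoids shell quoting problems with glob patterns when it is later handed to `subprocess.run`.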

Example repomix Configuration

Here’s an example repomix.config.json file:

{
  "include": [
    "**/*.py",
    "**/*.js",
    "**/*.ts",
    "**/*.jsx",
    "**/*.tsx"
  ],
  "ignore": {
    "customPatterns": [
      "node_modules/**",
      "venv/**",
      "**/__pycache__/**",
      "**/test/**"
    ]
  },
  "output": {
    "style": "xml",
    "filePath": "my-codebase.xml"
  }
}

For more information on repomix, visit the repomix GitHub repository.

License

MIT

Author

Dmitry Degtyarev (ddegtyarev@gmail.com)

DeepView

Project Details

- ai-1st/deepview-mcp

- MIT License

- Last Updated: 4/13/2025

Categories

Recomended MCP Servers

PowerPlatform Model Context Protocol server

Collection of apple-native tools for the model context protocol.

FalkorDB MCP Server

Integrate Arduino-based robotics (using the NodeMCU ESP32 or Arduino Nano 368 board) with AI using the MCP (Model...

An MCP server for interacting with the Financial Datasets stock market API.

Solana Model Context Protocol (MCP) Demo

MCP server for MS SQL Server

An MCP service for Ant Design components query | 一个 Ant Design 组件查询的 MCP 服务,包含组件文档、API 文档、代码示例和更新日志查询

MCP server for Oura API integration

An MCP Server to enable global access to Rememberizer

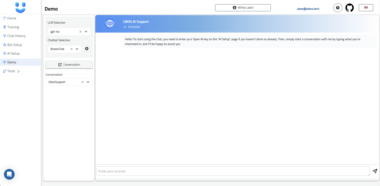

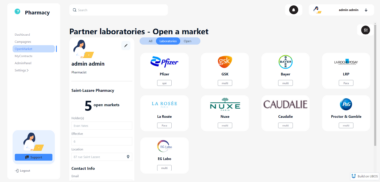

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.

From vibe coding to vibe deployment. UBOS MCP turns ideas into infra with one message.